OpenShift docs

OpenShift Container Platform¶

- OpenShift

- Red Hat’s approach to Kubernetes. Standardization

- OpenShift Container Platform 3 (OCP 3)

- OpenShift Cheat Sheets

- Helm Charts and OpenShift 3

- Chaos Monkey for kubernetes/Openshift

- OpenShift GitOps

- Debugging apps

- Capacity Management

- OpenShift High Availability

- Troubleshooting Java applications on Openshift

- Red Hat Communities of Practice. Uncontained.io Project

- Identity Management

- Quota Management

- Source-to-Image (S2I) Image Building Tools

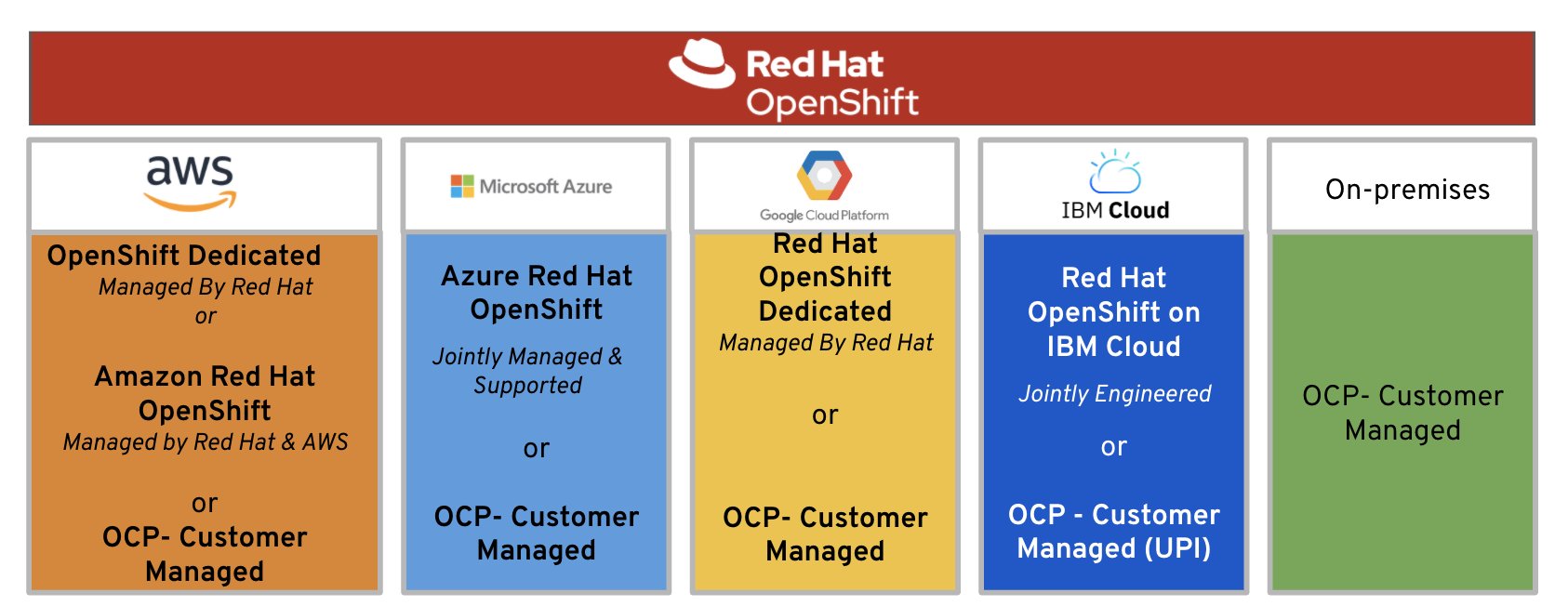

- OpenShift Container Platform 4 (OCP 4)

- OCP 4 Overview

- Three New Functionalities

- New Technical Components

- Installation & Cluster Autoscaler

- Cluster Autoscaler Operator

- Operators

- Introduction

- Catalog

- Certified Opeators, OLM Operators and Red Hat Operators

- Deploy and bind enterprise-grade microservices with Kubernetes Operators

- OpenShift Container Storage Operator (OCS)

- Cluster Network Operator (CNO) & Routers

- ServiceMesh Operator

- Serverless Operator (Knative)

- Crossplane Operator (Universal Control Plane API for Cloud Computing)

- Monitoring & Observability

- Build Images. Next-Generation Container Image Building Tools

- Registry & Quay

- Local Development Environment

- OpenShift Youtube

- OpenShift 4 Training

- OpenShift 4 Roadmap

- Kubevirt Virtual Machine Management on Kubernetes

- Storage in OCP 4. OpenShift Container Storage (OCS)

- Red Hat Advanced Cluster Management for Kubernetes

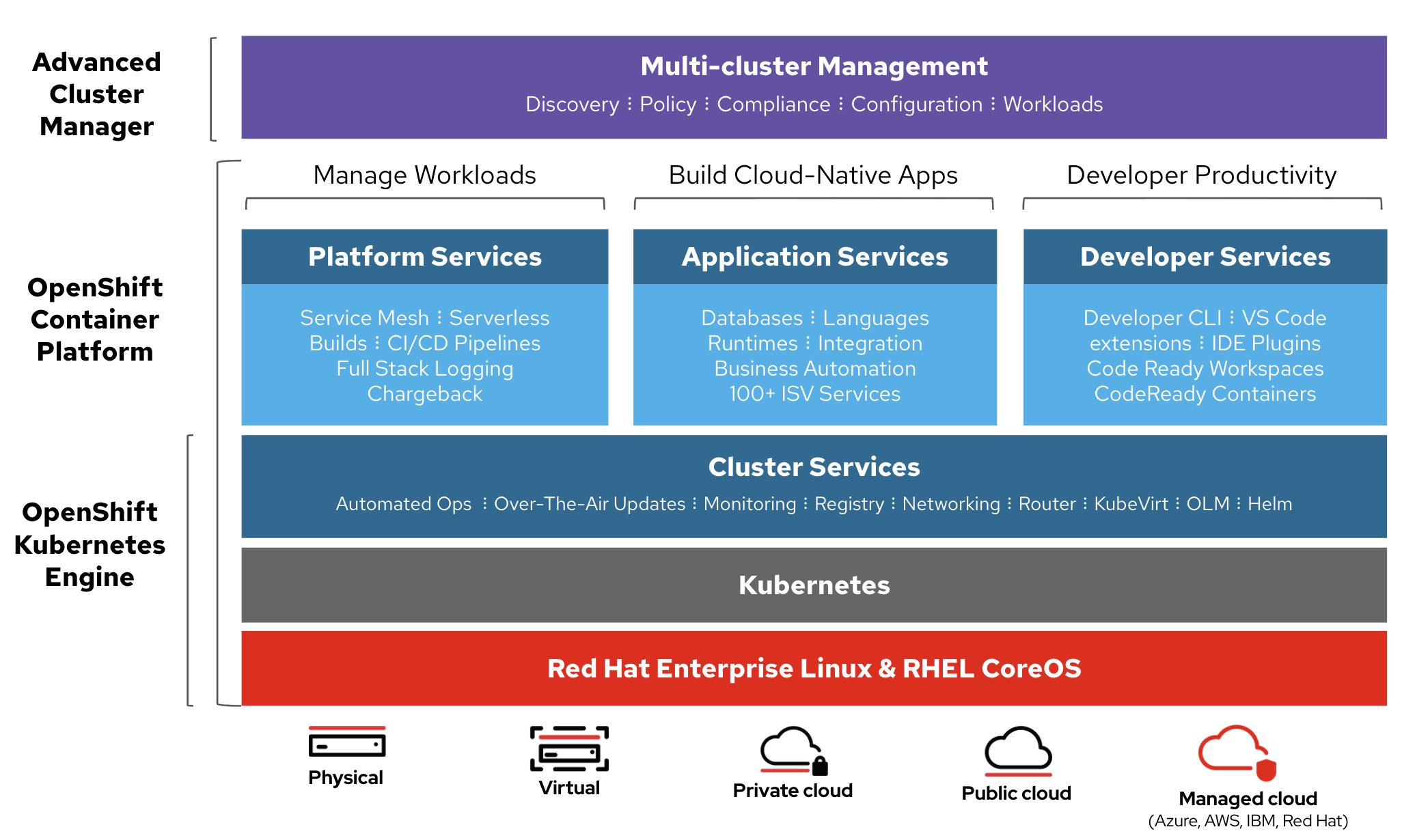

- OpenShift Kubernetes Engine (OKE)

- Red Hat CodeReady Containers. OpenShift 4 on your laptop

- OpenShift Hive: Cluster-as-a-Service. Easily provision new PaaS environments for developers

- OpenShift 4 Master API Protection in Public Cloud

- Backup and Migrate to OpenShift 4

- OKD4. OpenShift 4 without enterprise-level support

- OpenShift Serverless with Knative

- Helm Charts and OpenShift 4

- Red Hat Marketplace

- Kubestone. Benchmarking Operator for K8s and OpenShift

- OpenShift Cost Management

- Operators in OCP 4

- Quay Container Registry

- OpenShift Topology View

- OCP 4 Overview

- OpenShift.io online IDE

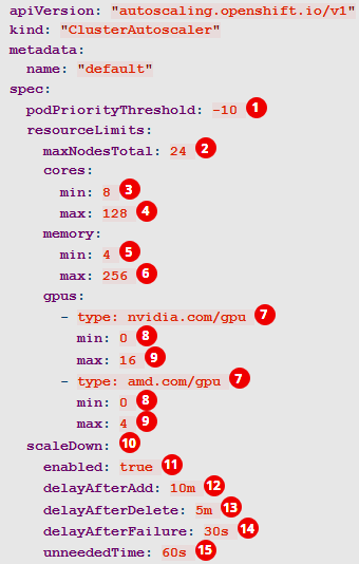

- Cluster Autoscaler in OpenShift

- e-Books

- Online Learning

- Local Installers

- Cloud Native Development Architecture. Architectural Diagrams

- Cluster Installers

- Networking (OCP 3 and OCP 4)

- Security

- Openshift Compliant Docker Images

- OpenShift on IBM Cloud. IBM Cloud Pak

- OpenShift on AWS

- Other Awesome Lists

- Videos

- Slides

OpenShift¶

- Wikipedia.org: OpenShift

- OpenShift.com

- OpenShift blog 🌟

- docs.openshift.com 🌟

- developers.redhat.com 🌟

- github.com/openshift/origin 🌟 Images for OpenShift 3 and 4 - see openshift/okd for more

- try.openshift.com 🌟 Create an OCP (OpenShift Container Platform) Cluster or OSD (OpenShift Dedicated) Cluster.

- okd.io The Community Distribution of Kubernetes that powers Red Hat OpenShift.

- OpenShift Commons Where users, partners, customers, and contributors come together to collaborate and work together on OpenShift. Commons builds connections and collaboration across OpenShift communities, projects and stakeholders.

- twitter.com/openshift

- OpenShift in DockerHub

- reddit.com/r/openshift

- reddit.com/r/redhat

Red Hat’s approach to Kubernetes. Standardization¶

| Reference | Author | URL |

|---|---|---|

| “Given the difficulty of navigating the cloud-native ecosystem, especially the one around Kubernetes, there is a high demand for easy-to-administer development platforms that deliver applications in Kubernetes-managed containers.” | OMDIA | Red Hat’s approach to Kubernetes |

| Industry momentum has aligned behind Kubernetes as the orchestration platform for Linux® containers. Choosing Kubernetes means you’ll be running the de facto standard regardless of which cloud environments and providers are in your future. | CNCF Survey 2019 | Red Hat’s approach to Kubernetes |

| “It’s not just enough to do Kubernetes. You do need to do CI/CD. You need to use alerting. You need to understand how the security model of the cloud and your applications interplay.” | Clayton Coleman,Senior Distinguished Engineer, Red Hat | Red Hat’s approach to Kubernetes |

| “Kubernetes is scalable. It helps develop applications faster. It does hybrid and multicloud. These are not just technology buzzwords, they’re real, legitimate business problems.” | Brian Gracely,Director, Product Strategy, Red Hat OpenShift | Red Hat’s approach to Kubernetes |

| “Our job is to make it easier and easier to use, either from an ops point of view or a developer point of view—while acknowledging it is complex, because we’re solving a complex problem.” | Chris Wright,Chief Technology Officer, Red Hat | Red Hat’s approach to Kubernetes |

OpenShift Container Platform 3 (OCP 3)¶

- Dzone.com: OpenShift Quick Start 🌟

- claydesk.com: Google Cloud App Engine Vs Red Hat OpenShift

- certdepot.net: OpenShift Free available resources 🌟

- blog.openshift.com: Using OpenShift 3 on your local environment 🌟

- developers.redhat.com: Securing .NET Core on OpenShift using HTTPS

- blog.openshift.com - Kubernetes: A Pod’s Life 🌟

- Container-native virtualization allows to run and manage virtual machine workloads alongside container workloads

- developers.redhat.com: Handling Angular environments in continuous delivery with Red Hat OpenShift

- developers.redhat.com: Customizing OpenShift project creation 🌟

- developers.redhat.com: Testing memory-based horizontal pod autoscaling on OpenShift 🌟

- How to Run HA Elasticsearch (ELK) on Red Hat OpenShift

OpenShift Cheat Sheets¶

Helm Charts and OpenShift 3¶

- blog.openshift.com: From Templates to Openshift Helm Charts

- Templating on OpenShift: should I use Helm templates or OpenShift templates? 🌟

Chaos Monkey for kubernetes/Openshift¶

- reddit: Help with Kube Monkey setup

- GitHub: kube-monkey

- GitHub: monkey-ops, Openshift compliant, no cluster-admin required

- chaoskube periodically kills random pods in your Kubernetes cluster

- Chaos Mesh

OpenShift GitOps¶

- blog.openshift.com: Introduction to GitOps with OpenShift

- learn.openshift.com: GitOps introduction

- blog.openshift.com: is it too late to integrate GitOps?

- blog.openshift.com: OpenShift Authentication Integration with ArgoCD

- openshift.com: From Code to Production with GitOps, Tekton and ArgoCD 🌟

Debugging apps¶

- developers.redhat.com: Installing debugging tools into a Red Hat OpenShift container with oc-inject

- developers.redhat.com: Debugging applications within Red Hat OpenShift containers

Capacity Management¶

- blog.openshift.com/full-cluster-capacity-management-monitoring-openshift

- blog.openshift.com/full-cluster-part-2-protecting-nodes

- full-cluster-part-3-capacity-management

- blog.openshift.comhow-full-is-my-cluster-part-4-right-sizing-pods-with-vertical-pod-autoscaler

- blog.openshift.com/how-full-is-my-cluster-part-5-a-capacity-management-dashboard

OpenShift High Availability¶

- blog.openshift.com/tag/multi-datacenter

- blog.openshift.com: How to survive an outage and live to tell about it!

- blog.openshift.com: Stateful Workloads and the Two Data Center Conundrum

- OpenShift 3.11 Multi-cluster Federation

- Multi-cluster Federation in OpenShift 4 is called KubeFed

- Katacoda e-learning platform – Federated Clusters

- KubeFed Operator

Troubleshooting Java applications on Openshift¶

- developers.redhat.com: Troubleshooting java applications on openshift

- dzone: 8 Options for Capturing Thread Dumps

Red Hat Communities of Practice. Uncontained.io Project¶

- Red Hat Communities of Practice

- Uncontained.io began as a project in the Red Hat Container Community of Practice to share knowledge about OpenShift adoption with members of Red Hat’s Consulting organization.

- uncontained.io/articles/openshift-ha-installation

- uncontained.io/articles/openshift-and-the-org

- v1.uncontained.io: Red Hat Consulting DevOps And OpenShift Playbooks 🌟 Red Hat Consulting DevOps and OpenShift Playbooks are guides for implementing DevOps technical practices and container automation approaches using Red Hat commercial open source products, including OpenShift Enterprise 3. They are intended to reflect real-world experience delivering solutions through these processes and technologies.

Identity Management¶

Quota Management¶

- GitHub redhat-cop: OpenShift Toolkit - Quota Management 🌟

- OpenShift 4 Resource Management

- techbeatly.com: How to create, increase or decrease project quota

- Quotas setting per project

- Quotas setting across multiple projects

Source-to-Image (S2I) Image Building Tools¶

- Source-to-Image (S2I) Build

- Source-to-Image (S2I) is a tool for building reproducible, Docker-formatted container images. It produces ready-to-run images by injecting application source into a container image and assembling a new image. The new image incorporates the base image (the builder) and built source and is ready to use with the docker run command. S2I supports incremental builds, which re-use previously downloaded dependencies, previously built artifacts, etc.

OpenShift Container Platform 4 (OCP 4)¶

- blog.openshift.com: Introducing Red Hat OpenShift 4

- nextplatform.com: red hat flexes CoreOS muscle in openshift kubernetes platform

- OpenShift 4 documentation 🌟

- Dzone: What’s in OpenShift 4?

- blog.openshift.com: OpenShift 4 Install Experience

- operatorhub.io OperatorHub.io is a new home for the Kubernetes community to share Operators. Find an existing Operator or list your own today.

- cloudowski.com: Honest review of OpenShift 4 🌟

- Enabling OpenShift 4 Clusters to Stop and Resume Cluster VMs

- blog.openshift.com: Simplifying OpenShift Case Information Gathering Workflow: Must-Gather Operator (In the context of Red Hat OpenShift 4.x and Kubernetes, it is considered a bad practice to ssh into a node and perform debugging actions) 🌟

- blog.openshift.com: Configure the OpenShift Image Registry backed by OpenShift Container Storage

- blog.openshift.com: OpenShift Scale: Running 500 Pods Per Node 🌟

- blog.openshift.com: Enterprise Kubernetes with OpenShift (Part one) 🌟

- devclass.com: OpenShift 4.4 goes all out on mixed workloads, puts observability at devs’ fingertips 🌟

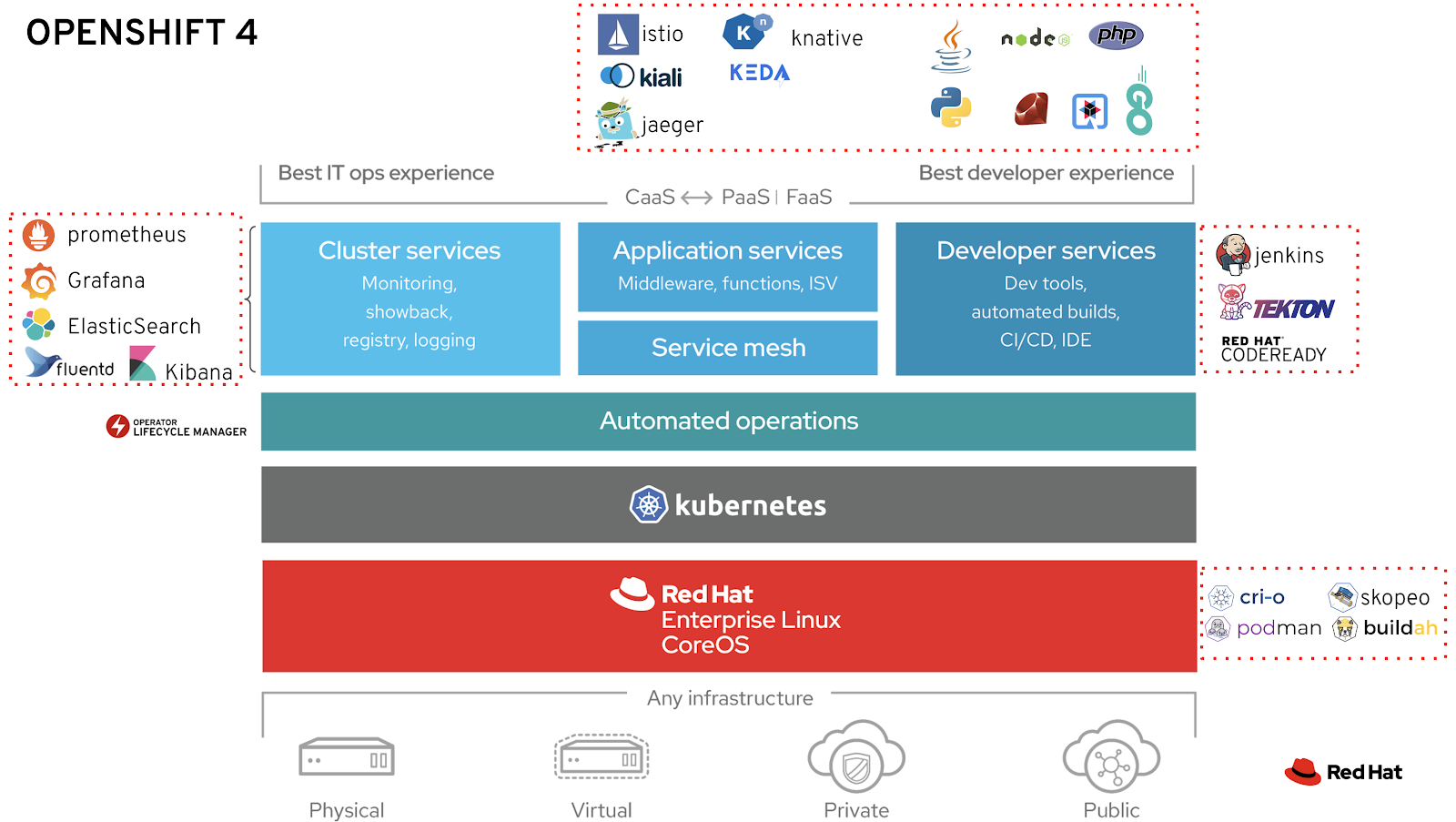

OCP 4 Overview¶

- Result of RedHat’s (now IBM) acquisition of CoreOS -> RHCOS (Red Hat Enterprise Linux CoreOS)

- Merge of two leading Kubernetes distributions, Tectonic and OpenShift:

- CoreOS Tectonic:

- Operator Framework

- quay.io container build and registry service

- Stable tiny Linux distribution with ignition bootstrap and transaction-based update engine.

- OpenShift:

- Wide enterprise adoption

- Security

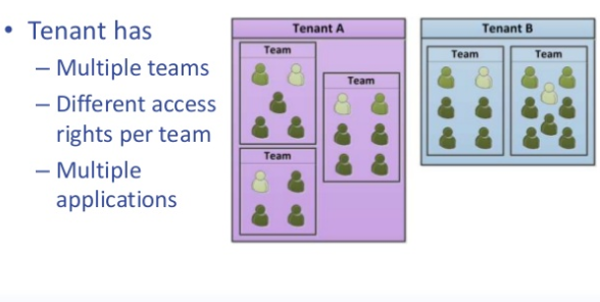

- Multi-tenancy features (self-service)

- CoreOS Tectonic:

- OpenShift 4 is built on top of Kubernetes 1.13+

- Roadmap

- Release Notes

Three New Functionalities¶

- Self-Managing Platform

- Application Lifecycle Management (OLM):

- OLM Operator:

- Responsible for deploying applications defined by ClusterServiceVersion (CSV) manifest.

- Not concerned with the creation of the required resources; users can choose to manually create these resources using the CLI, or users can choose to create these resources using the Catalog Operator.

- Catalog Operator:

- Responsible for resolving and installing CSVs and the required resources they specify. It is also responsible for watching CatalogSources for updates to packages in channels and upgrading them (optionally automatically) to the latest available versions.

- A user that wishes to track a package in a channel creates a Subscription resource configuring the desired package, channel, and the CatalogSource from which to pull updates. When updates are found, an appropriate InstallPlan is written into the namespace on behalf of the user.

- OLM Operator:

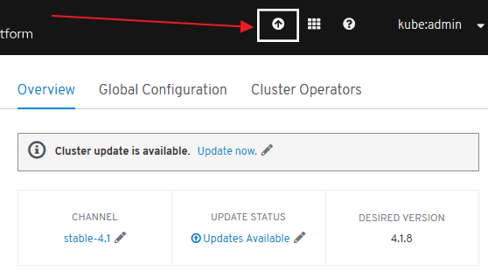

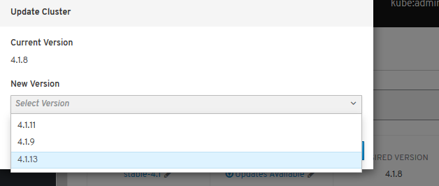

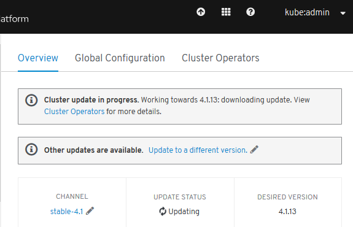

- Automated Infrastructure Management (Over-The-Air Updates)

New Technical Components¶

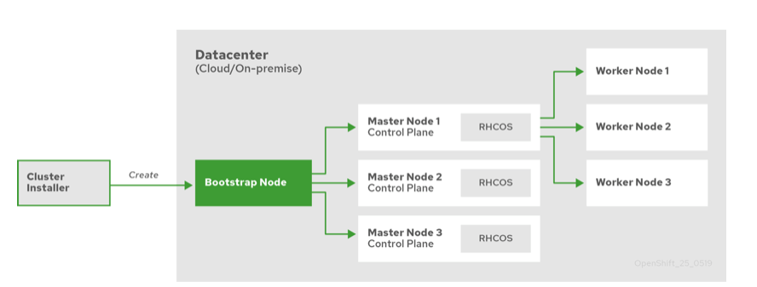

- New Installer:

- Storage: Cloud integrated storage capability used by default via OCS Operator (Red Hat)

- There are a number of persistent storage options available to you through the OperatorHub / Storage vendors that don’t involve Red Hat, NFS or Gluster.

- Kubernetes-native persistent storage technologies available (non-RedHat solutions):

- Operators End-To-End!: responsible for reconciling the system to the desired state

- Cluster configuration kept as API objects that ease its maintenance (“everything-as-code” approach):

- Every component is configured with Custom Resources (CR) that are processed by operators.

- No more painful upgrades and synchronization among multiple nodes and no more configuration drift.

- List of operators that configure cluster components (API objects):

- API server

- Nodes via Machine API

- Ingress

- Internal DNS

- Logging (EFK) and Monitoring (Prometheus)

- Sample applications

- Networking

- Internal Registry

- Oauth (and authentication in general)

- etc

- Cluster configuration kept as API objects that ease its maintenance (“everything-as-code” approach):

- At the Node Level:

- RHEL CoreOS is the result of merging CoreOS Container Linux and RedHat Atomic host functionality and is currently the only supported OS to host OpenShift 4.

- Node provisioning with ignition, which came with CoreOS Container Linux

- Atomic host updates with rpm-ostree

- CRI-O as a container runtime

- SELinux enabled by default

- Machine API: Provisioning of nodes. Abstraction mechanism added (API objects to declaratively manage the cluster):

- Based on Kubernetes Cluster API project

- Provides a new set of machine resources:

- Machine

- Machine Deployment

- MachineSet:

- distributes easily your nodes among different Availability Zones

- manages multiple node pools (e.g. pool for testing, pool for machine learning with GPU attached, etc)

- Everything “just another pod”

Installation & Cluster Autoscaler¶

- New installer openshift-install tool, replacement for the old Ansible scripts.

- 40 min (AWS). Terraform.

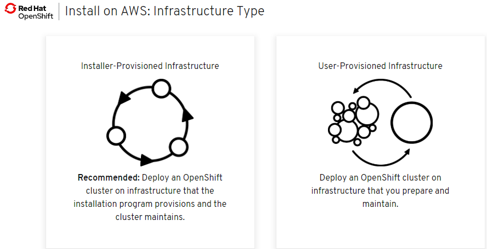

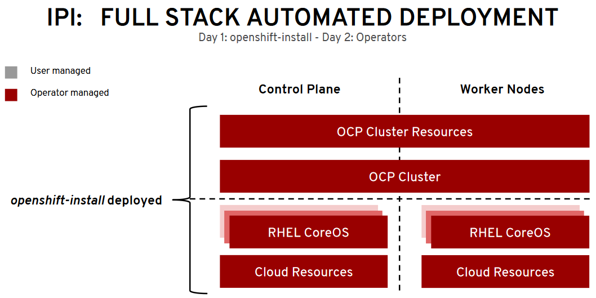

- 2 installation patterns:

- Installer Provisioned Infrastructure (IPI)

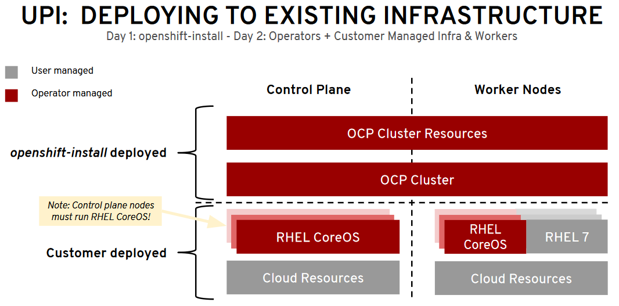

- User Provisioned Infrastructure (UPI)

- The whole process can be done in one command and requires minimal infrastructure knowledge (IPI):

openshift-install create cluster

IPI & UPI¶

- 2 installation patterns:

- Installer Provisioned Infrastructure (IPI): On supported platforms, the installer is capable of provisioning the underlying infrastructure for the cluster. The installer programmatically creates all portions of the networking, machines, and operating systems required to support the cluster. Think of it as best-practice reference architecture implemented in code. It is recommended that most users make use of this functionality to avoid having to provision their own infrastructure. The installer will create and destroy the infrastructure components it needs to be successful over the life of the cluster.

- User Provisioned Infrastructure (UPI): For other platforms or in scenarios where installer provisioned infrastructure would be incompatible, the installer can stop short of creating the infrastructure, and allow the platform administrator to provision their own using the cluster assets generated by the install tool. Once the infrastructure has been created, OpenShift 4 is installed, maintaining its ability to support automated operations and over-the-air platform updates.

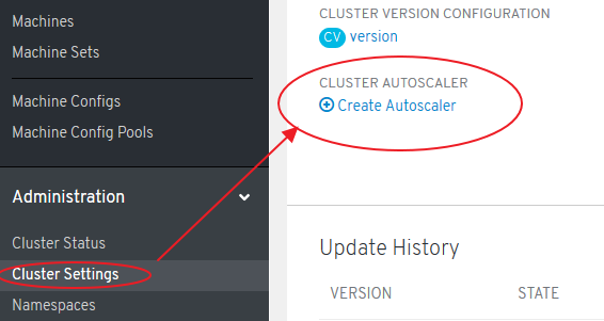

Cluster Autoscaler Operator¶

- Adjusts the size of an OpenShift Container Platform cluster to meet its current deployment needs. It uses declarative, Kubernetes-style arguments

- Increases the size of the cluster when there are pods that failed to schedule on any of the current nodes due to insufficient resources or when another node is necessary to meet deployment needs. The ClusterAutoscaler does not increase the cluster resources beyond the limits that you specify.

- A huge improvement over the manual, error-prone process used in the previous version of OpenShift and RHEL nodes.

Operators¶

Introduction¶

- Core of the platform

- The hierarchy of operators, with clusterversion at the top, is the single door for configuration changes and is responsible for reconciling the system to the desired state.

- For example, if you break a critical cluster resource directly, the system automatically recovers itself.

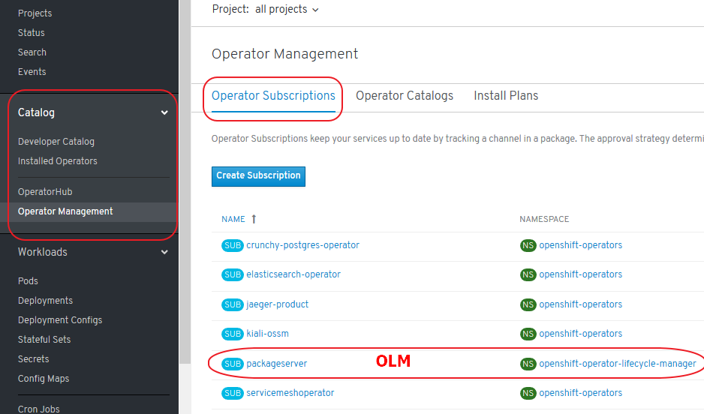

- Similarly to cluster maintenance, operator framework used for applications. As a user, you get SDK, OLM (Lifecycle Manager of all Operators and their associated services running across their clusters) and embedded operator hub.

- OLM Arquitecture

- Adding Operators to a Cluster (They can be added via CatalogSource)

- The supported method of using Helm charts with Openshift is via the Helm Operator

- twitter.com/operatorhubio

- View the list of Operators available to the cluster from the OperatorHub:

$ oc get packagemanifests -n openshift-marketplace

NAME AGE

amq-streams 14h

packageserver 15h

couchbase-enterprise 14h

mongodb-enterprise 14h

etcd 14h myoperator 14h

...

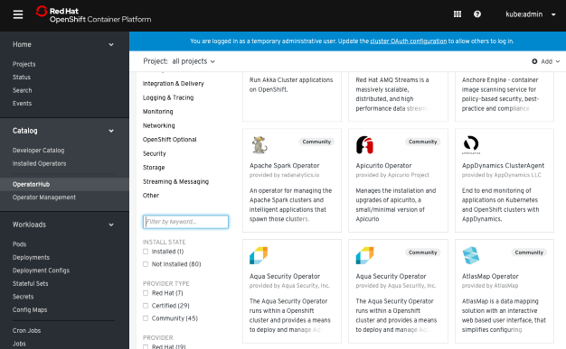

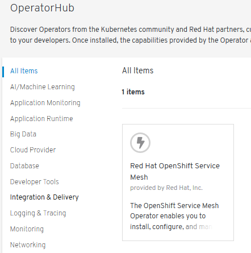

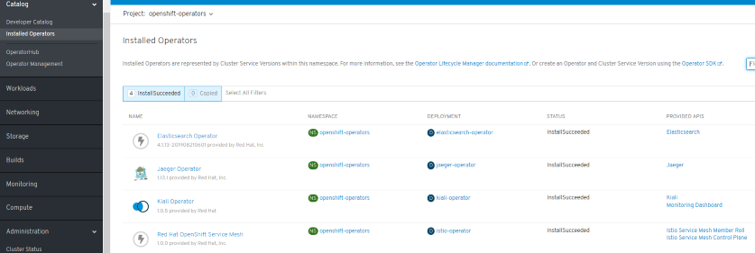

Catalog¶

- Developer Catalog

- Installed Operators

- OperatorHub (OLM)

- Operator Management:

- Operator Catalogs are groups of Operators you can make available on the cluster. They can be added via CatalogSource (i.e. “catalogsource.yaml”). Subscribe and grant a namespace access to use the installed Operators.

- Operator Subscriptions keep your services up to date by tracking a channel in a package. The approval strategy determines either manual or automatic updates.

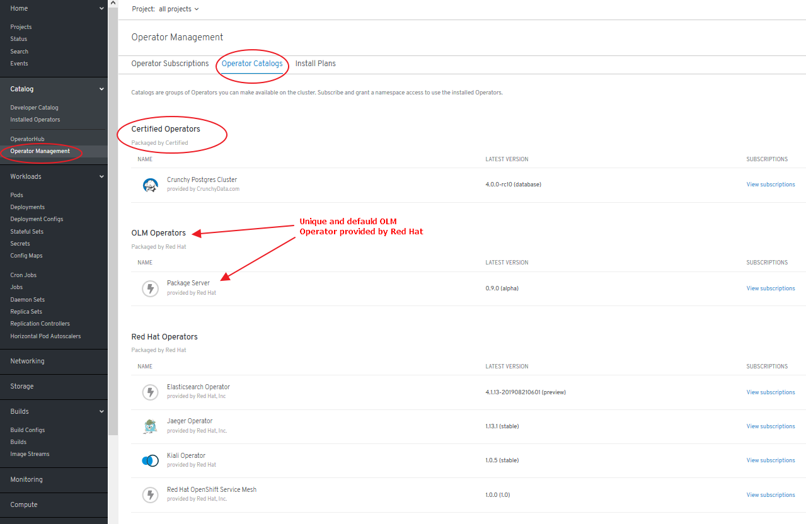

Certified Opeators, OLM Operators and Red Hat Operators¶

- Certified Operators packaged by Certified:

- Not provided by Red Hat

- Supported by Red Hat

- Deployed via “Package Server” OLM Operator

- OLM Operators:

- Packaged by Red Hat

- “Package Server” OLM Operator includes a CatalogSource provided by Red Hat

- Red Hat Operators:

- Packaged by Red Hat

- Deployed via “Package Server” OLM Operator

- Community Edition Operators:

- Deployed by any means

- Not supported by Red Hat

Deploy and bind enterprise-grade microservices with Kubernetes Operators¶

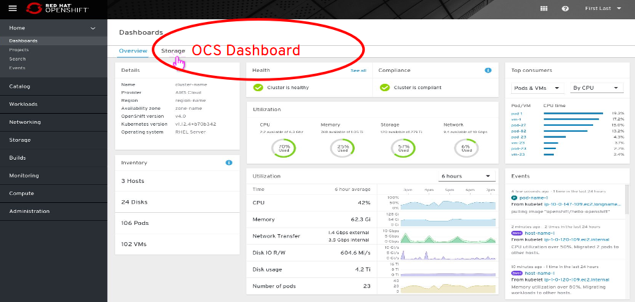

OpenShift Container Storage Operator (OCS)¶

OCS 3 (OpenShift 3)¶

- OpenShift Container Storage based on GlusterFS technology.

- Not OpenShift 4 compliant: Migration tooling will be available to facilitate the move to OCS 4.x (OpenShift Gluster APP Mitration Tool).

OCS 4 (OpenShift 4)¶

- OCS Operator based on Rook.io with Operator LifeCycle Manager (OLM).

- Tech Stack:

- Rook (don’t confuse this with non-redhat “Rook Ceph” -> RH ref).

- Replaces Heketi (OpenShift 3)

- Uses Red Hat Ceph Storage and Noobaa.

- Red Hat Ceph Storage

- Noobaa:

- Red Hat Multi Cloud Gateway (AWS, Azure, GCP, etc)

- Asynchronous replication of data between my local ceph and my cloud provider

- Deduplication

- Compression

- Encryption

- Rook (don’t confuse this with non-redhat “Rook Ceph” -> RH ref).

- Backups available in OpenShift 4.2+ (Snapshots + Restore of Volumes)

- OCS Dashboard in OCS Operator

Cluster Network Operator (CNO) & Routers¶

- Cluster Network Operator (CNO): The cluster network is now configured and managed by an Operator. The Operator upgrades and monitors the cluster network.

- Router plug-ins in OCP3:

- A « route » is the external entrypoint to a Kubernetes Service. This is one of the biggest differences between Kubernetes and OpenShift Enterprise (= OCP) and origin.

- OpenShift router has the endpoints as targets and therefore the pod of the application.

- Shared/Stikcy sessions are enabled by default

- HAProxy template router (default router): HTTP(s) & TLS-enabled traffic via SIN.

- F5 BIG-IP Router plug-in integrates with an existing F5 BIG-IP system in your environment

- Since the 9th May 2018, NGINX is also available as « router ».

- Routers in OCP4:

- Ingress Controller is the most common way to allow external access to an OpenShift Container Platform cluster

- Configuring Ingress Operator in OCP4

- Limited to HTTP, HTTPS using SNI, and TLS using SNI (sufficient for web applications and services)

- Has two replicas by default, which means it should be running on two worker nodes.

- Can be scaled up to have more replicas on more nodes.

- The Ingress Operator implements the ingresscontroller API and is the component responsible for enabling external access to OpenShift Container Platform cluster services.

- The operator makes this possible by deploying and managing one or more HAProxy-based Ingress Controllers to handle routing.

- Network Security Zones in Openshift (DMZ)

oc describe clusteroperators/ingress

oc logs --namespace=openshift-ingress-operator deployments/ingress-operator

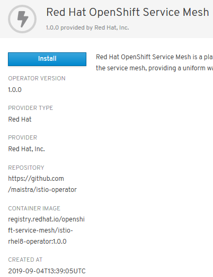

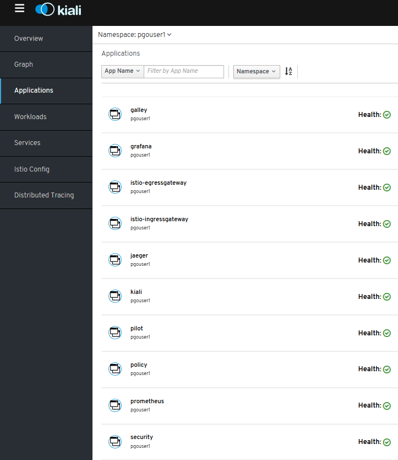

ServiceMesh Operator¶

- ServiceMesh: Istio + kiali + Jaeger

- ServiceMesh Community Edition: github.com/maistra/istio

- Red Hat community installer compliant with OCP 4.1: maistra.io/docs/getting_started/install

- Outcome: publicly known errors in 2 or 3 components.

- Certified ServiceMesh Operator

- ServiceMesh GA in September 2019 (available in OperatorHub):

- Certified & Packaged by Red Hat

- “One-click” deployment

- Preparing to install Red Hat OpenShift Service Mesh. To install the Red Hat OpenShift Service Mesh Operator, you must first install these Operators:

- Elasticsearch

- Jaeger

- Kiali

- Do not install Community versions of the Operators. Community Operators are not supported.

Serverless Operator (Knative)¶

- Operator install on OperatorHub.io

- Knative Eventing (Camel-K, Kafka, Cron, etc)

- Integration with Openshift ServiceMesh, Logging, Monitoring.

- openshift.com/learn/topics/serverless

- redhat-developer-demos.github.io/knative-tutorial

Crossplane Operator (Universal Control Plane API for Cloud Computing)¶

Monitoring & Observability¶

Grafana¶

- Integrated Grafana v5.4.3 (deployed by default):

- Monitoring -> Dashboards

- Project “openshift-monitoring”

- https://grafana.com/docs/v5.4/

Prometheus¶

- Integrated Prometheus v2.7.2 (deployed by default):

- Monitoring -> metrics

- Project “openshift-monitoring”

- https://prometheus.io/docs/prometheus/2.7/getting_started/

Alerts & Silences¶

- Integrated Alertmanager 0.16.2 (deployed by default):

- Monitoring -> Alerts

- Monitoring -> Silences

- Silences temporarily mute alerts based on a set of conditions that you define. Notifications are not sent for alerts that meet the given conditions.

- Project “openshift-monitoring”

- https://prometheus.io/docs/alerting/alertmanager/

Cluster Logging (EFK)¶

- EFK: Elasticsearch + Fluentd + Kibana

- Cluster Logging EFK not deployed by default

- As an OpenShift Container Platform cluster administrator, you can deploy cluster logging to aggregate logs for a range of OpenShift Container Platform services.

- The OpenShift Container Platform cluster logging solution requires that you install both the Cluster Logging Operator and Elasticsearch Operator. There is no use case in OpenShift Container Platform for installing the operators individually. You must install the Elasticsearch Operator using the CLI following the directions below. You can install the Cluster Logging Operator using the web console or CLI.

Deployment procedure based on CLI + web console:

- docs.openshift.com/container-platform/4.4/logging/cluster-logging-deploying.html

- Elasticsearch Operator must be installed in Project “openshift-operators-redhat”

- Cluster Logging Operator must be deployed in Project “openshift-logging”

- CatalogSourceConfig added to enable Elasticsearch Operator on the cluster

- etc.

| OCP Release | Elasticsearch | Fluentd | Kibana | EFK deployed by default |

|---|---|---|---|---|

| OpenShift 3.11 | 5.6.13.6 | 0.12.43 | 5.6.13 | No |

| OpenShift 4.1 | 5.6.16 | ? | 5.6.16 | No |

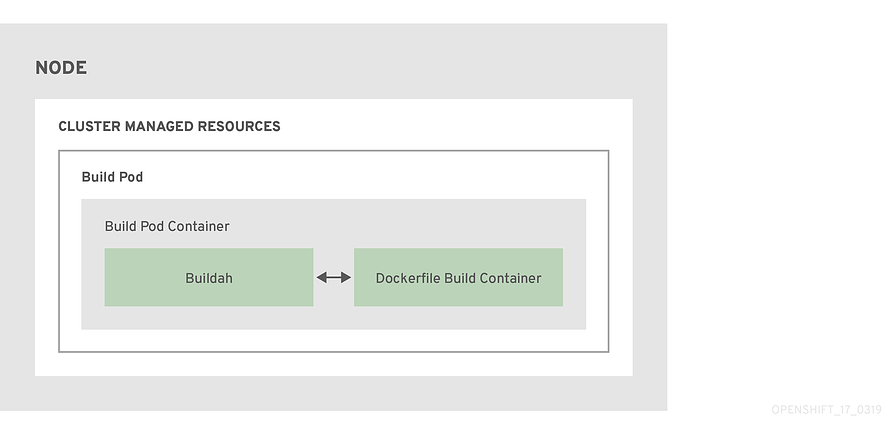

Build Images. Next-Generation Container Image Building Tools¶

- Redesign of how images are built on the platform.

- Instead of relying on a daemon on the host to manage containers, image creation, and image pushing, we are leveraging Buildah running inside our build pods.

- This aligns with the general OpenShift 4 theme of making everything “just another pod”

- A simplified set of build workflows, not dependent on the node host having a specific container runtime available.

- Dockerfiles that built under OpenShift 3.x will continue to build under OpenShift 4.x and S2I builds will continue to function as well.

- The actual BuildConfig API is unchanged, so a BuildConfig from a v3.x cluster can be imported into a v4.x cluster and work without modification.

- Podman & Buildah for docker users

- Openshift ImageStreams

- Openshift 4 image builds

- Custom image builds with Buildah

- Rootless podman and NFS

Registry & Quay¶

- A Docker registry is a place to store and distribute Docker images.

- It serves as a target for your docker push and docker pull commands.

- Openshift ImageStreams

- The registry is now managed by an Operator instead of

oc admregistry. - Quay.io is a hosted Docker registry from CoreOS:

- Main features:

- “Powerful build triggers”

- “Advanced team permissions”

- “Secure storage”

- One of the more enterprise-friendly options out there, offering fine-grained permission controls.

- They support any git server and let you build advanced workflows by doing things like mapping git branches to Docker tags so that when you commit code it automatically builds a corresponding image.

- Quay offers unlimited free public repositories. Otherwise, you pay by the number of private repositories. There’s no extra charge for storage or bandwidth.

- Main features:

- Quay 3.0 released in May 2019: support for multiple architectures, Windows containers, and a Red Hat Enterprise Linux (RHEL)-based image to this container image registry.

- Quay 3.1 released in September 2019: The newest Quay feature is repository mirroring, which complements our existing geographic replication features. Repository mirroring reflects content between distinct, different registries. With this, you can synchronize whitelisted repositories or a source registry subset into Quay. This makes it much easier to distribute images and related data through Quay.

- Quay Community Edition operator

- Quay 3.1 Certified Operator is not available in Openshift and must be purchased

- Open Source ProjectQuay.io Container Registry:

- Red Hat Introduces open source Project Quay container registry

- [github.com/quay](https://github.com/quay]

Local Development Environment¶

- For version 3 we have Container Development Kit (or its open source equivalent for OKD - minishift) which launches a single node VM with Openshift and it does it in a few minutes. It’s perfect for testing also as a part of CI/CD pipeline.

- Openshift 4 on your laptop: There is a working solution for single node OpenShift cluster. It is provided by a new project called CodeReady Containers.

- Procedure:

untar

crc setup

crc start

environment variables

oc login

OpenShift Youtube¶

- OpenShift Youtube

- youtube: Installing OpenShift 4 on AWS with operatorhub.io integration 🌟

- youtube: OpenShift 4 OAuth Identity Providers

- youtube: OpenShift on Google Cloud, AWS, Azure and localhost

- youtube: Getting Started with OpenShift 4 Security 🌟

- youtube playlist: London 2020 | OpenShift Commons Gathering 🌟 OCP4 Updates & Roadmaps, Customer Stories, OpenShift Hive (case study), Operator Ecosystem.

OpenShift 4 Training¶

OpenShift 4 Roadmap¶

- blog.openshift.com: OpenShift 4 Roadmap (slides) - this link may change

- blog.openshift.com: OpenShift Container Storage (OCS 3 & 4 slides)

- This link is now broken. Grab a copy from here

- blog.openshift.com: OpenShift 4 Roadmap Update (slides)

- This link is now broken. Grab a copy from here

Kubevirt Virtual Machine Management on Kubernetes¶

Storage in OCP 4. OpenShift Container Storage (OCS)¶

Red Hat Advanced Cluster Management for Kubernetes¶

OpenShift Kubernetes Engine (OKE)¶

Red Hat CodeReady Containers. OpenShift 4 on your laptop¶

- developers.redhat.com: Developing applications on Kubernetes 🌟

- Red Hat OpenShift 4.2 on your laptop: Introducing Red Hat CodeReady Containers

- dzone: Code Ready Containers - Decision Management Developer Tools Update

- Overview: running crc on a remote server

- dzone: Code Ready Containers: Installing Process Automation Learn how to make better use of Red Hat’s Code Ready Containers platform by installing process automation from a catalog.

OpenShift Hive: Cluster-as-a-Service. Easily provision new PaaS environments for developers¶

- OpenShift Hive is an operator which enables operations teams to easily provision new PaaSenvironments for developers improving productivity and reducing process burden due to internalIT regulations.

- blog.openshift.com: openshift hive cluster as a service

- youtube: how to deliver OpenShift as a service (just like Red Hat)

OpenShift 4 Master API Protection in Public Cloud¶

- blog.openshift.com: Introducing Red Hat OpenShift 4.3 to Enhance Kubernetes Security 🌟 OpenShift 4.3 adds new capabilities and platforms to the installer, helping customers to embrace their company’s best security practices and gain greater access control across hybrid cloud environments. Customers can deploy OpenShift clusters to customer-managed, pre-existing VPN / VPC (Virtual Private Network / Virtual Private Cloud) and subnets on AWS, Microsoft Azure and Google Cloud Platform. They can also install OpenShift clusters with private facing load balancer endpoints, not publicly accessible from the Internet, on AWS, Azure and GCP.

- containerjournal.com: Red Hat Delivers Latest Kubernetes Enhancements

- Create an OpenShift 4.2 Private Cluster in AWS 🌟

- cloud.ibm.com: openshift-security

- docs.aporeto.com: OpenShift Master API Protection

Backup and Migrate to OpenShift 4¶

OKD4. OpenShift 4 without enterprise-level support¶

- OKD.io: The Community Distribution of Kubernetes that powers Red Hat OpenShift.

- docs.okd.io 🌟

- GitHub: OKD4

- youtube.com: OKD4

- OKD4 Roadmap: The Road To OKD4: Operators, FCOS and K8S 🌟

- github.com: OKD 4 Roadmap

- youtube.com: How To Install OKD4 on GCP - Vadim Rutkovsky (Red Hat)

- blog.openshift.com: Guide to Installing an OKD 4.4 Cluster on your Home Lab

- okd4-upi-lab-setup: Building an OpenShift - OKD 4.X Lab Installing OKD4.X with User Provisioned Infrastructure. Libvirt, iPXE, and FCOS

- redhat.com: How to run a Kubernetes cluster on your laptop 🌟 Want containers? Learn how to set up and run a Kubernetes container cluster on your laptop with OKD.

OpenShift Serverless with Knative¶

- redhat.com: What is knative?

- developers.redhat.com: Serverless Architecture

- datacenterknowledge.com: Explaining Knative, the Project to Liberate Serverless from Cloud Giants

- Announcing OpenShift Serverless 1.5.0 Tech Preview – A sneak peek of our GA

- Serverless applications made faster and simpler with OpenShift Serverless GA

Helm Charts and OpenShift 4¶

- The supported method of using Helm charts with Openshift4 is via the Helm Operator

- youtube

- blog.openshift.com: Helm and Operators on OpenShift, Part 1

- blog.openshift.com: Helm and Operators on OpenShift, Part 2

Red Hat Marketplace¶

- marketplace.redhat.com 🌟

- developers.redhat.com: Building Kubernetes applications on OpenShift with Red Hat Marketplace

Kubestone. Benchmarking Operator for K8s and OpenShift¶

OpenShift Cost Management¶

- blog.openshift.com: Tech Preview: Get visibility into your OpenShift costs across your hybrid infrastructure 🌟

- Cost Management and OpenShift - Sergio Ocón-Cárdenas (Red Hat) 🌟

Operators in OCP 4¶

- OLM operator lifecycle manager

- Top Kubernetes Operators

- operatorhub.io

- learn.crunchydata.com

- developers.redhat.com: Operator pattern: REST API for Kubernetes and Red Hat OpenShift 🌟

Quay Container Registry¶

- Red Hat Introduces open source Project Quay container registry

- Red Hat Quay

- projectquay.io

- quay.io

- GitHub Quay (OSS)

- blog.openshift.com: Introducing Red Hat Quay

- operatorhub.io/operator/quay

OpenShift Topology View¶

OpenShift.io online IDE¶

- openshift.io 🌟 an online IDE for building container-based apps, built for team collaboration.

Cluster Autoscaler in OpenShift¶

- OpenShift 3.11: Configuring the cluster auto-scaler in AWS

- OpenShift 4.4: Applying autoscaling to an OpenShift Container Platform cluster

e-Books¶

- O’Reilly Free Book: DevOps with OpenShift

- O’Reilly Free Book: Openshift for developers

- O’Reilly: Free ebook: Kubernetes Operators: Automating the Container Orchestration Platform

- Manning: Openshift in action

- Packt publishing: Learn Openshift

- O’Reilly: Free ebook: Knative Cookbook: Building Effective Serverless Applications with Kubernetes and OpenShift

- redhat.com Free ebook: Container Storage for Dummies

Kubernetes e-Books¶

Online Learning¶

- learn.openshift.com 🌟 Interactive Learning Portal

- katacoda.com 🌟 Interactive Learning and Training Platform for Software Engineers

- redhatgov.io

- udemy.com: Red Hat OpenShift With Jenkins: DevOps For Beginners

- udemy.com: OpenShift Enterprise v3.2 Installation and Configuration

- udemy.com: Ultimate Openshift (2018) Bootcamp by School of Devops 🌟 With Openshift Origin 3.10 / OKD 2018, Kubernetes, Jenkins Pipelines, Prometheus, Istio, Micro Services, PaaS

Local Installers¶

- developers.redhat.com: Red Hat Container Development Kit

- A few other options to use OKD locally include oc cluster up and minishift. These may be a better fit for your use case if you only need a quick throwaway environment.

- github.com/redhatdemocentral: OpenShift Container Platform Install Demo 🌟

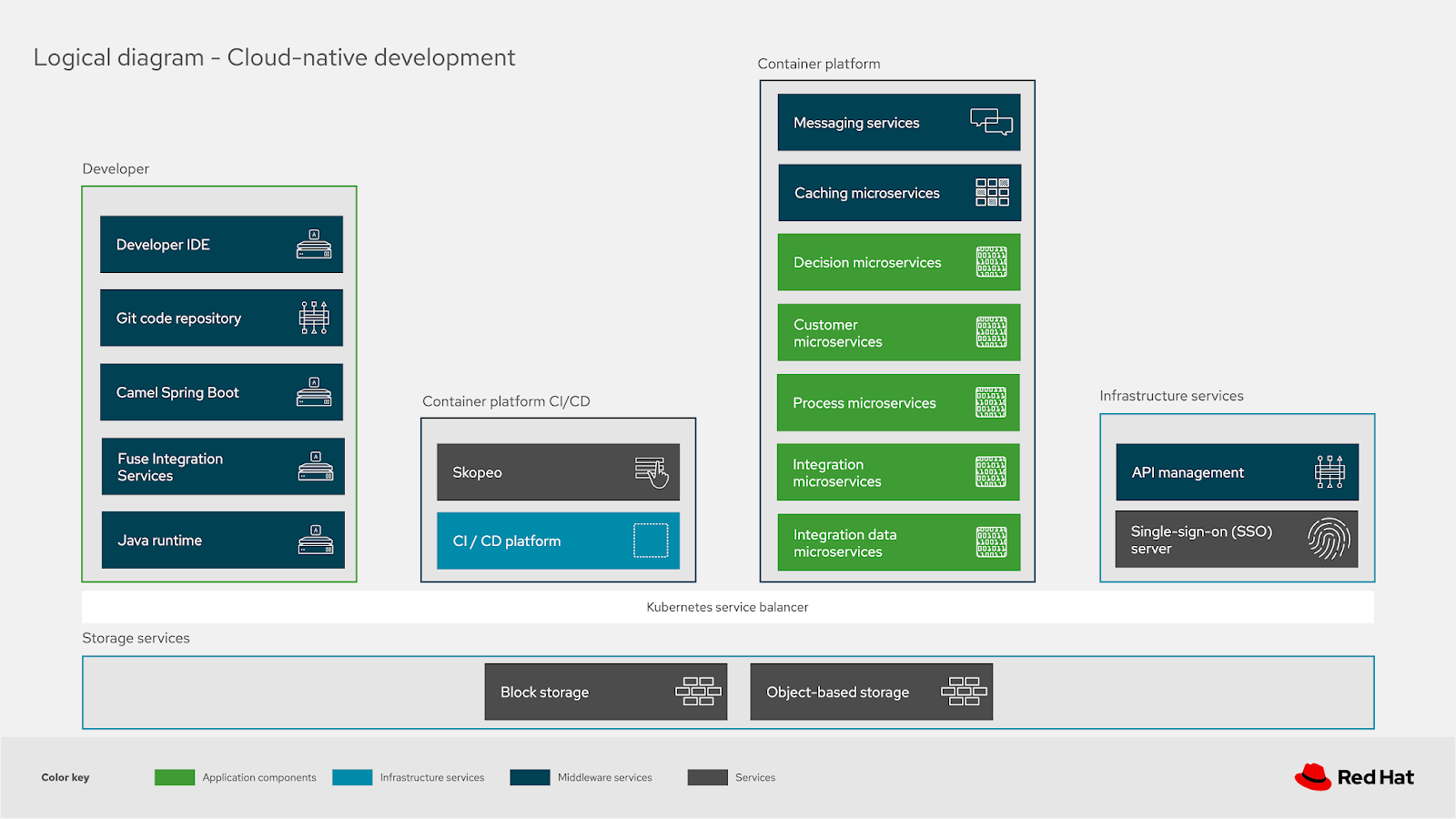

Cloud Native Development Architecture. Architectural Diagrams¶

- Cloud-native development is an approach to building and running applications to fully exploit the advantages of the cloud computing model (i.e. responsive, elastic and resilient applications).

- Dzone: Cloud-native development - A blueprint 🌟 These architectural blueprints are providing you with a way to implement a solution using open source technologies focusing on the integrations, structures and interactions proven to work.

- Dzone: Cloud-Native Development - Common Architectural Elements 🌟

- Portfolio Architecture WorkShops🌟 Workshops for creating impactful architectural diagrams. This workshop will teach you how to use, design, and create architectural diagrams based on the draw.io tooling and Red Hat Portfolio Architecture design elelements. You’ll leverage existing portfolio architecture diagrams as starting points.

- Portfolio Architecture Tooling

- gitlab.com: Portfolio Architecture Examples

Cluster Installers¶

OKD 3¶

- OKD.io: The Community Distribution of Kubernetes that powers Red Hat OpenShift.

- blog.openshift.com: Installing OKD 3.10 on a Single Host 🌟

- youtube.com: OpenShift Origin is now OKD. Installation of OKD 3.10 from start to finish

- Install RedHat OKD 3.10 on your development box: This repository is a set of scripts that will allow you easily install the latest version (3.10) of OKD in a single node fashion. What that means is that all of the services required for OKD to function (master, node, etcd, etc.) will all be installed on a single host. The script supports a custom hostname which you can provide using the interactive mode.]

- docs.okd.io: Planning your installation

OpenShift 3¶

OpenShift 4¶

- github.com/openshift/installer openshift installer 🌟

- CI/CD Pipeline to deploy OpenShift Container Platform 4.x to AWS 🌟

- blog.openshift.com: 9 steps to awesome with kubernetes openshift

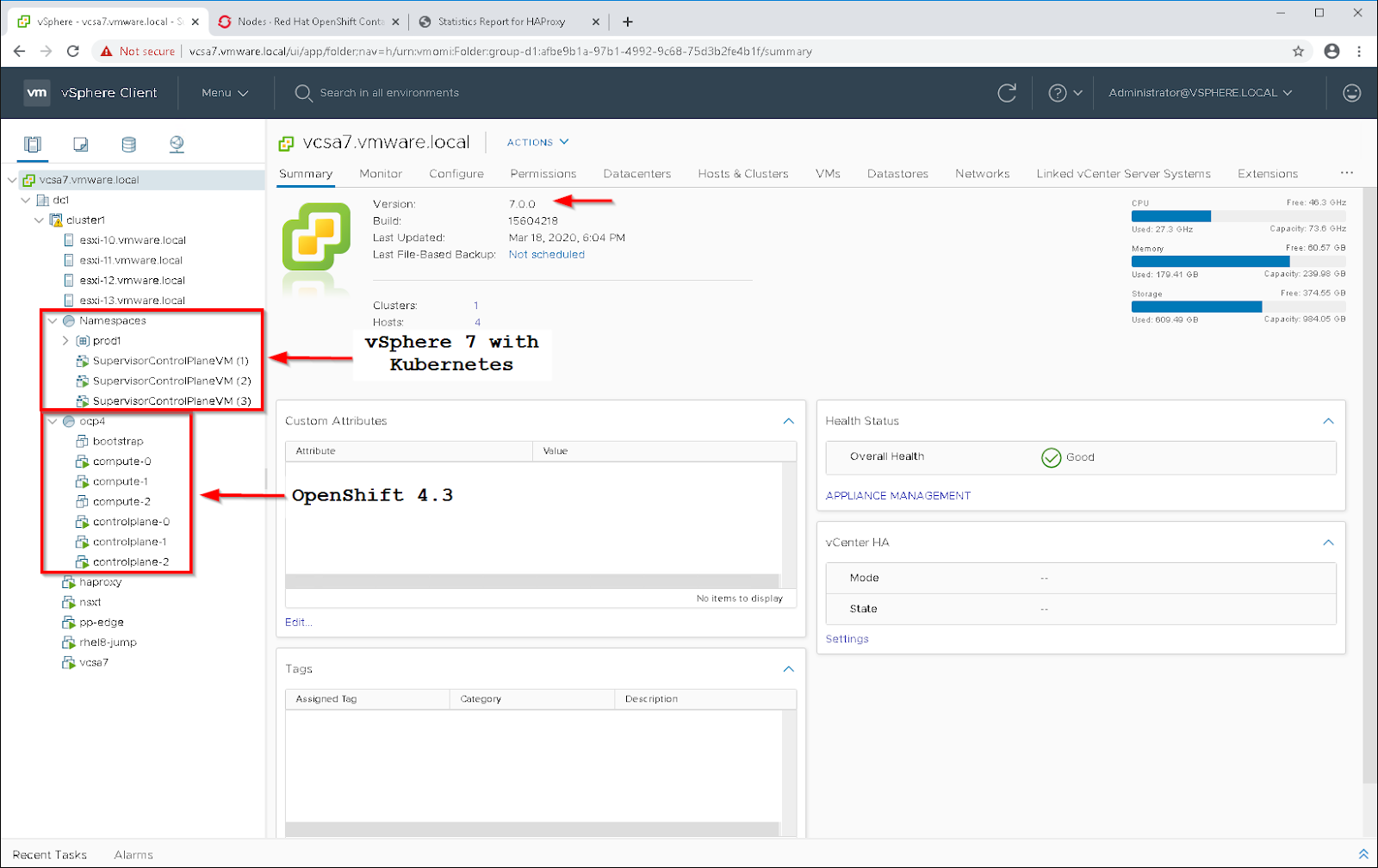

OpenShift 4 deployment on VMWare vSphere¶

- blog.openshift.com: OpenShift 4.2 vSphere Install Quickstart

- blog.openshift.com: OpenShift 4.2 vsphere install with static IPs 🌟

- youtube: Deploy OpenShift 4 to vSphere using OpenShift’s UPI

Deploying OpenShift 4.4 to VMware vSphere 7¶

Networking (OCP 3 and OCP 4)¶

- Using sidecars to analyze and debug network traffic in OpenShift and Kubernetes pods

- developers.redhat.com: Skupper.io: Let your services communicate across Kubernetes clusters

- blog.openshift.com: Troubleshooting OpenShift network performance with a netperf DaemonSet

- blog.openshift.com: Advanced Network customizations for OpenShift Install

Security¶

How is OpenShift Container Platform Secured?¶

- docs.openshift.com: OpenShift 3 Overview

- docs.openshift.com: OpenShift 3 Securing the Container Platform

- ocs.openshift.com: OpenShift 4 Understanding Authentication

Security Context Constraints¶

- docs.openshift.com: Managing Security Context Constraints

- docs.openshift.com: Managing Security Context Constraints. Security Context Constraints

- Dzone: Understanding OpenShift Security Context Constraints

Review Security Context Constraints¶

- Security Context Constraints (SCCs) control what actions pods can perform and what resources they can access.

- SCCs combine a set of security configurations into a single policy object that can be applied to pods. These security configurations include, but are not limited to, Linux Capabilities, Seccomp Profiles, User and Group ID Ranges, and types of mounts.

- OpenShift ships with several SCCs. The most constrained is the restricted SCC, and the least constrained in the privileged SCC. The other SCCs provide intermediate levels of constraint for various use cases. The restricted SCC is granted to all authenticated users by default.

- The default SCC for most pods should be the restricted SCC. If required, a cluster administrator may allow certain pods to run with different SCCs. Pods should be run with the most restrictive SCC possible.

- Pods inherit their SCC from the Service Account used to run the pod. With the default project template, new projects get a Service Account named default that is used to run pods. This default service account is only granted the ability to run the restricted SCC.

- Recommendations:

- Use OpenShift’s Security Context Constraint feature, which has been contributed to Kubernetes as Pod Security Policies. PSPs are still beta in Kubernetes 1.10, 1.11, and 1.12.

- Use the restricted SCC as the default

- For pods that require additional access, use the SCC that grants the least amount of additional privileges or create a custom SCC Audit

- To show all available SCCs:

oc describe scc - To audit a single pod:

oc describe pod <POD> | grep openshift.io\/scc - Remediation: Apply the SCC with the least privilege required

OpenShift Network Model & Network Policy¶

- docs.openshift.com: Understanding networking

- NetworkPolicies and Microsegmentation

- Fully Automated Management of Egress IPs with the egressip-ipam-operator 🌟

- GitHub: redhat-cop OpenShift Toolkit Network Policy 🌟

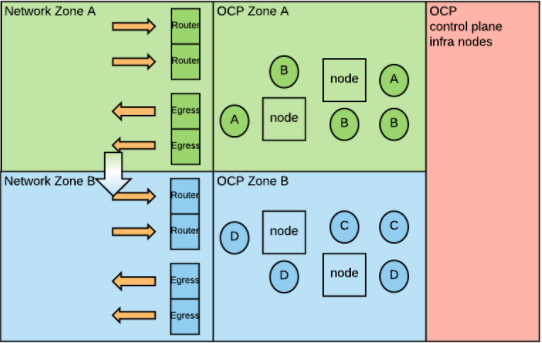

Network Security Zones¶

- stackoverflow.com: Is that possible to deploy an openshift or kubernetes in DMZ zone? 🌟

- OpenShift and Network Security Zones: Coexistence Approaches 🌟🌟🌟

- Introduction: Kubernetes and consequently OpenShift adopt a flat Software Defined Network (SDN) model, which means that all pods in the SDN are in the same logical network. Traditional network implementations adopt a zoning model in which different networks or zones are dedicated to specific purposes, with very strict communication rules between each zone. When implementing OpenShift in organizations that are using network security zones, the two models may clash. In this article, we will analyze a few options for coexistence. But first, let’s understand the two network models a bit more in depth.

- Network Zones have been the widely accepted approach for building security into a network architecture. The general idea is to create separate networks, each with a specific purpose. Each network contains devices with similar security profiles. Communications between networks is highly scrutinized and controlled by firewall rules (perimeter defense).

- Conclusion: A company’s security organization must be involved when deciding how to deploy OpenShift with regard to traditional network zones. Depending on their level of comfort with new technologies you may have different options. If physical network separation is the only acceptable choice, you will have to build a cluster per network zone. If logical network type of separations can be considered, then there are ways to stretch a single OpenShift deployment across multiple network zones. This post presented a few technical approaches.

OpenShift Route and OpenShift Ingress¶

- openshift.com: Kubernetes Ingress vs OpenShift Route

- Ingress Operator in OCP 4

- cloud.ibm.com: OpenShift Ingress

OpenShift Egress¶

- Accessing External Services Using Egress Router

- How to Enable Static Egress IP in OCP

- dzone: OpenShift Egress Options Network security is a crucial part of any of Software as a Service type business. Read on to see how to implement OpenShift to create better network security.

Openshift Compliant Docker Images¶

- Red Hat Container Catalog - RedHat Registry (registry.redhat.io) 🌟 License required

- DockerHub OpenShift

- github.com/sclorg/

- github.com/sclorg/postgresql-container/

- github.com/sclorg/mariadb-container

Gitlab¶

Atlassian Confluence6¶

Sonatype Nexus 3¶

Rocket Chat¶

OpenShift on IBM Cloud. IBM Cloud Pak¶

- cloudpak8s.io 🌟 IBM Cloud™ Paks are enterprise-ready, containerized software solutions that give clients an open, faster and more secure way to move core business applications to any cloud.

- IBM Cloud Pak Playbook 🌟

OpenShift on AWS¶

- blog.openshift.com: AWS and red hat quickstart workshop

- aws.amazon.com: AWS Quick Start (OpenShift 3.11 on AWS) View deployment guide