Kubernetes¶

- Certified Kubernetes Offerings

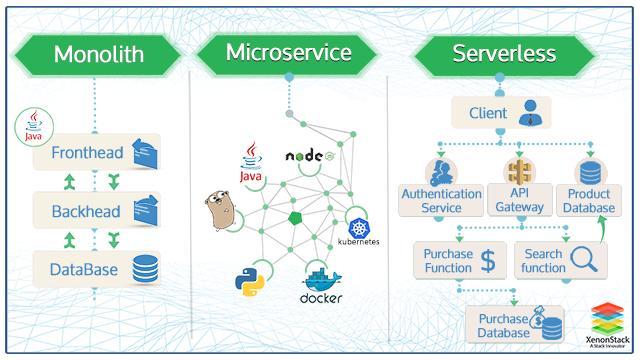

- The State of Cloud-Native Development. Details data on the use of Kubernetes, serverless computing and more

- Kubernetes open-source container-orchestration

- Extending Kubernetes

- Kubectl commands

- Client Libraries for Kubernetes

- Helm Kubernetes Tool

- Lens Kubernetes IDE

- Cluster Autoscaler Kubernetes Tool

- Kubernetes Special Interest Groups (SIGs). Kubernetes Community

- Kubernetes Troubleshooting

- Kubernetes Tutorials

- Kubernetes Patterns

- e-Books

- Kubernetes Operators

- Kubernetes Networking

- Kubernetes Sidecars

- Kubernetes Security

- Kubernetes Scheduling and Scheduling Profiles

- Kubernetes Storage

- Non-production Kubernetes Local Installers

- Kubernetes in Public Cloud

- On-Premise Production Kubernetes Cluster Installers

- Comparative Analysis of Kubernetes Deployment Tools

- Deploying Kubernetes Cluster with Kops

- Deploying Kubernetes Cluster with Kubeadm

- Deploying Kubernetes Cluster with Ansible

- kube-aws Kubernetes on AWS

- Kubespray

- Conjure up

- WKSctl

- Terraform (kubernetes the hard way)

- Caravan

- ClusterAPI

- Microk8s

- k8s-tew

- Kubernetes Distributions

- Cloud Development Kit (CDK) for Kubernetes

- SpringBoot with Docker

- Docker in Docker

- Serverless with OpenFaas and Knative

- Kubernetes interview questions

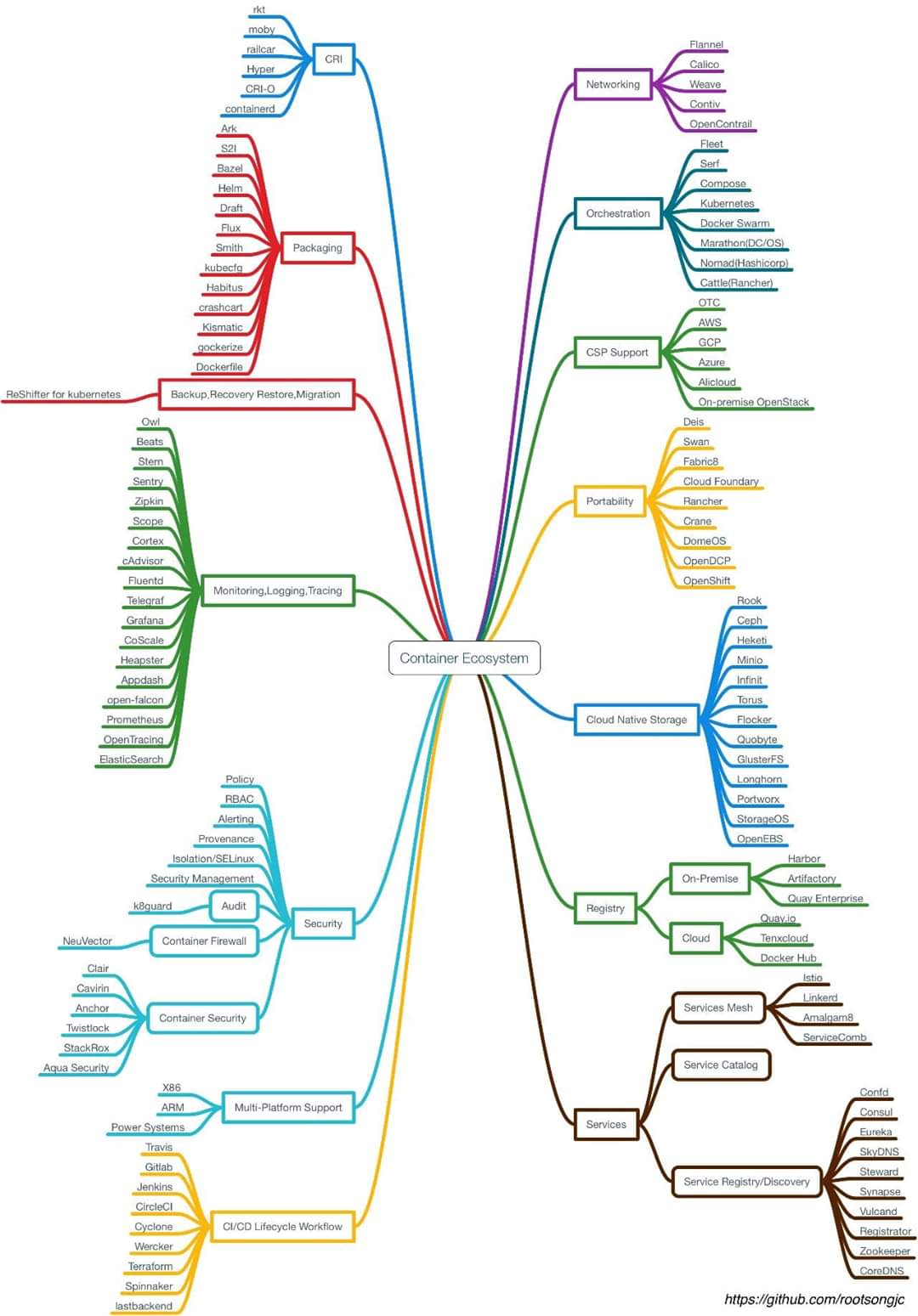

- Container Ecosystem

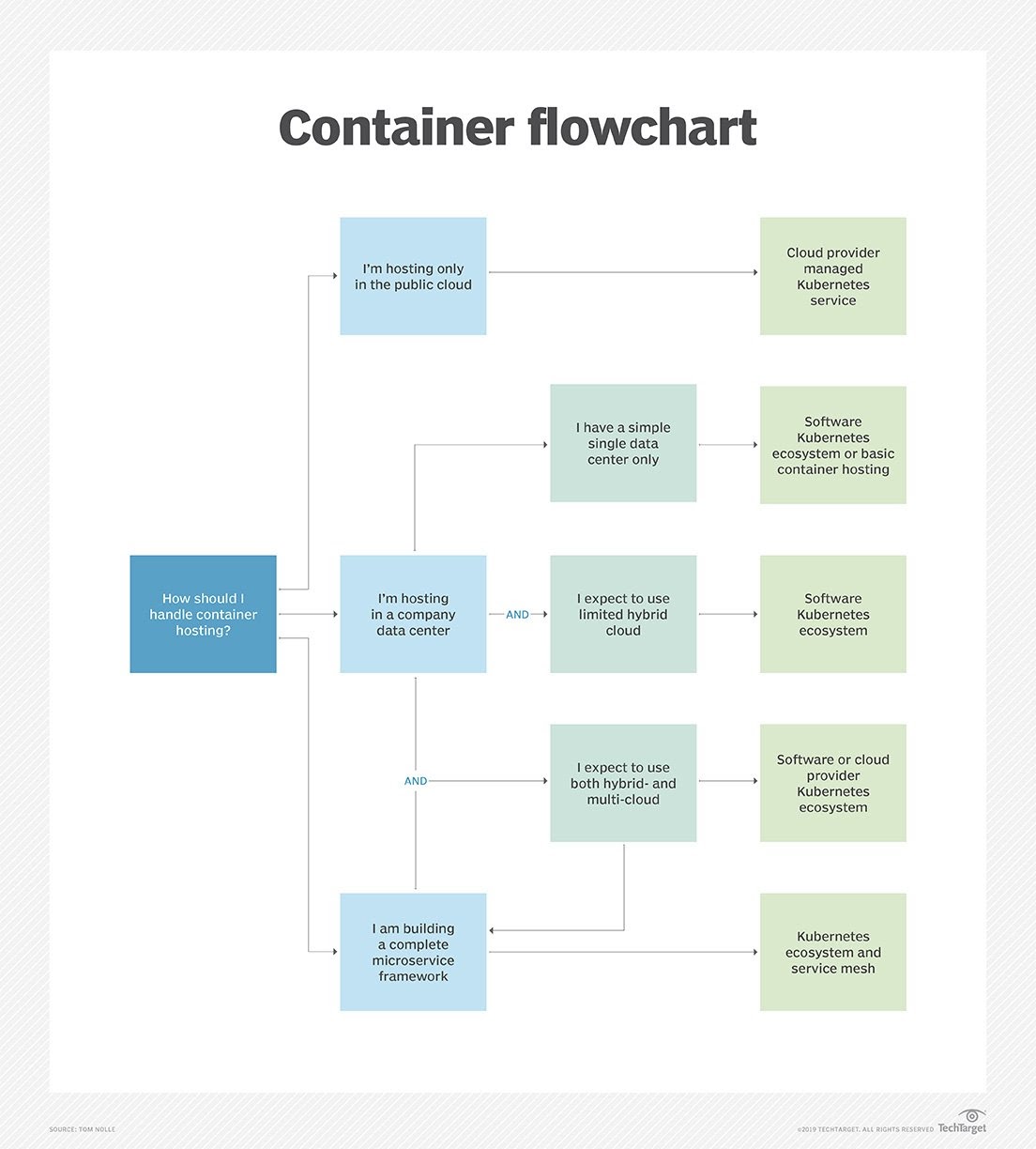

- Container Flowchart

- Videos

Certified Kubernetes Offerings¶

The State of Cloud-Native Development. Details data on the use of Kubernetes, serverless computing and more¶

Kubernetes open-source container-orchestration¶

- Wikipedia.org: Kubernetes

- kubernetes.io

- unofficial-kubernetes.readthedocs.io

- Awesome kubernetes 🌟

- https://www.reddit.com/r/kubernetes 🌟

- stackify.com: The Advantages of Using Kubernetes and Docker Together 🌟

- Ansible for devops: Kubernetes

- kubedex.com 🌟 Discover, Compare and Share Kubernetes Applications

- medium.com: The Kubernetes Scheduler: this series aims to advance the understanding of Kubernetes and its underlying concepts

- medium.com: A Year Of Running Kubernetes at MYOB, And The Importance Of Empathy

- blogs.mulesoft.com - K8s: 8 questions about Kubernetes

- labs.mwrinfosecurity.com: Attacking Kubernetes through Kubelet

- blog.doit-intl.com: Kubernetes and Secrets Management in the Cloud

- medium.com: Kubernetes Canary Deployment #1 Gitlab CI

- kubernetes-on-aws.readthedocs.io

- techbeacon.com: Why teams fail with Kubernetes—and what to do about it 🌟

- itnext.io: Kubernetes rolling updates, rollbacks and multi-environments 🌟

- learnk8s.io: Architecting Kubernetes clusters — choosing a worker node size 🌟

- youtube: Kubernetes 101: Get Better Uptime with K8s Health Checks

- learnk8s.io: Load balancing and scaling long-lived connections in Kubernetes 🌟

- itnext.io: Successful & Short Kubernetes Stories For DevOps Architects

- itnext.io: K8s Vertical Pod Autoscaling 🌟

- medium.com: kubernetes Pod Priority and Preemption

- returngis.net: Pruebas de vida de nuestros contenedores en Kubernetes (in Spanish: liveness probes for our containers in Kubernetes)

- itnext.io: K8s prevent queue worker Pod from being killed during deployment How to prevent a Kubernetes queue worker Pod (for example, one consuming from RabbitMQ) from being killed during a deployment while it is handling a message.

- youtube: deployment strategies in kubernetes | recreate | rolling update | blue/green | canary

- kodekloud.com: Kubernetes Features Every Beginner Must Know

- platform9.com: Kubernetes CI/CD Pipelines at Scale

- learnk8s.io: Architecting Kubernetes clusters — how many should you have? 🌟

- magalix.com: Capacity Planning 🌟 When we have multiple Pods with different Priority Class values, the admission controller starts by sorting Pods according to their priority. What happens when there are no nodes with available resources to schedule a high-priority Pod?

- 4 trends for Kubernetes cloud-native teams to watch in 2020

- enterprisersproject.com: Kubernetes: Everything you need to know (2020) 🌟

- learnk8s.io: Provisioning cloud resources (AWS, GCP, Azure) in Kubernetes 🌟

- padok.fr: Kubernetes’ Architecture: Understanding the components and structure of clusters 🌟

- medium.com: Top 15 Online Courses to Learn Docker, Kubernetes, and AWS for Fullstack Developers and DevOps Engineers

- Allocatable memory and CPU in Kubernetes Nodes 🌟 Not all CPU and memory in your Kubernetes nodes can be used to run Pods. In this article, you will learn how managed Kubernetes services such as AKS, EKS and GKE reserve resources for workloads, operating systems, daemons and Kubernetes agents.

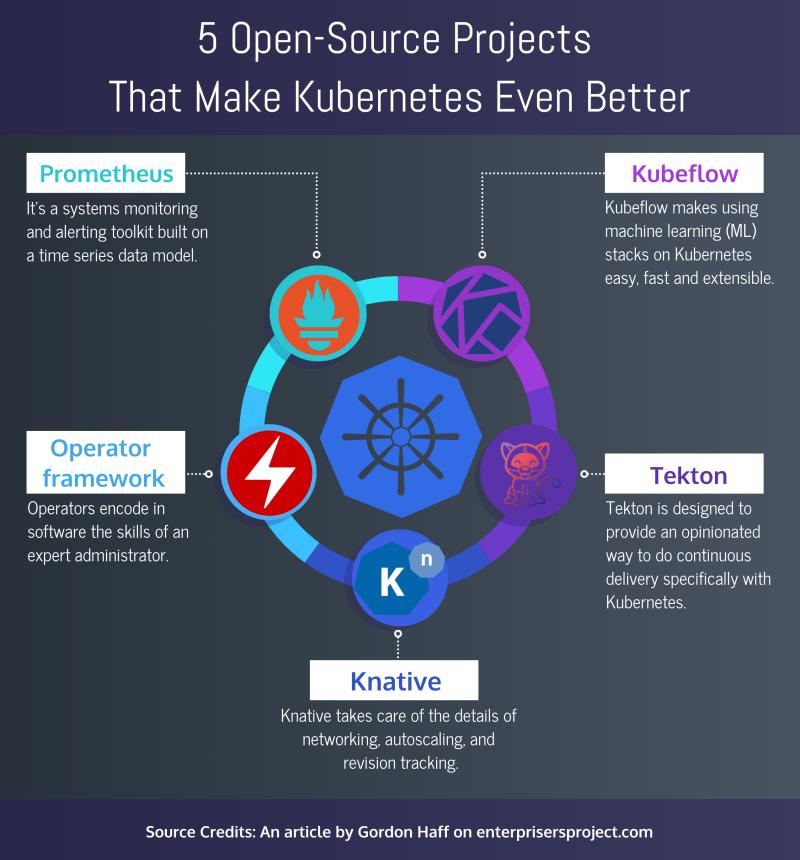

- 5 open source projects that make Kubernetes even better: Prometheus, Operator framework, Knative, Tekton, Kubeflow 🌟 Open source projects bring many additional capabilities to Kubernetes, such as performance monitoring, developer tools, serverless capabilities, and CI/CD workflows. Check out these five widely used options

- Optimize Kubernetes cluster management with these 5 tips 🌟 Effective Kubernetes cluster management requires operations teams to balance pod and node deployments with performance and availability needs.

- medium: How to Deploy a Web Application with Kubernetes 🌟 Learn how to create a Kubernetes cluster from scratch and deploy a web application (SPA+API) in two hours.

- blog.pipetail.io: 10 most common mistakes using kubernetes 🌟

Templating YAML in Kubernetes with real code. YQ YAML processor¶

- Templating YAML in Kubernetes with real code

- TL;DR: You should use tools such as yq and kustomize to template YAML resources instead of relying on tools that interpolate strings such as Helm.

- If you’re working on large-scale projects, you should consider using real code. You can find hands-on examples of how to programmatically generate Kubernetes resources in Java, Go, JavaScript, C# and Python in this repository.
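As a minimal sketch of the "real code" approach (not taken from the linked repository), the Python snippet below builds a Deployment as a plain dictionary and serialises it to JSON, which kubectl accepts alongside YAML. The function name `make_deployment`, the image and the labels are illustrative assumptions.

```python
import json


def make_deployment(name: str, image: str, replicas: int = 1) -> dict:
    """Build an apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical example values; pipe the output to `kubectl apply -f -`.
manifest = make_deployment("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))
```

Because the manifest is ordinary data, values such as the replica count can be set with function arguments, loops or conditionals rather than string interpolation.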

Kubernetes Limits¶

- kubernetes.io Policy Limit Ranges

- sysdig.com: Understanding Kubernetes limits and requests by example 🌟

- dev.to/aurelievache: Understanding Kubernetes: part 22 – LimitRange

Kubernetes Knowledge Hubs¶

- k8sref.io 🌟 Kubernetes Reference

- Kubernetes Research. Research documents on node instance types, managed services, ingress controllers, CNIs, etc. 🌟 A research hub to collect all knowledge around Kubernetes. Those are in-depth reports and comparisons designed to drive your decisions. Should you use GKE, AKS, EKS? How many nodes? What instance type?

Extending Kubernetes¶

Adding Custom Resources. Extending Kubernetes API with Kubernetes Resource Definitions. CRD vs Aggregated API¶

- Custom Resources

- Use a custom resource (CRD or Aggregated API) if most of the following apply:

- You want to use Kubernetes client libraries and CLIs to create and update the new resource.

- You want top-level support from kubectl; for example, kubectl get my-object object-name.

- You want to build new automation that watches for updates on the new object, and then CRUD other objects, or vice versa.

- You want to write automation that handles updates to the object.

- You want to use Kubernetes API conventions like .spec, .status, and .metadata.

- You want the object to be an abstraction over a collection of controlled resources, or a summarization of other resources.

- Kubernetes provides two ways to add custom resources to your cluster:

- CRDs are simple and can be created without any programming.

- API Aggregation requires programming, but allows more control over API behaviors like how data is stored and conversion between API versions.

- Kubernetes provides these two options to meet the needs of different users, so that neither ease of use nor flexibility is compromised.

- Aggregated APIs are subordinate API servers that sit behind the primary API server, which acts as a proxy. This arrangement is called API Aggregation (AA). To users, it simply appears that the Kubernetes API is extended.

- CRDs allow users to create new types of resources without adding another API server. You do not need to understand API Aggregation to use CRDs.

- Regardless of how they are installed, the new resources are referred to as Custom Resources to distinguish them from built-in Kubernetes resources (like pods).
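To illustrate how little is needed for the CRD route, here is a hedged sketch that builds a CustomResourceDefinition manifest in Python. The group `example.com`, the kind `MyObject` and the helper name `make_crd` are hypothetical; the permissive schema is only a starting point and a real CRD would normally declare its fields.

```python
import json


def make_crd(group: str, kind: str, plural: str, version: str = "v1") -> dict:
    """Build a namespaced apiextensions.k8s.io/v1 CustomResourceDefinition."""
    open_object = {"type": "object", "x-kubernetes-preserve-unknown-fields": True}
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # The CRD name must have the form <plural>.<group>.
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",
            "names": {"kind": kind, "plural": plural, "singular": kind.lower()},
            "versions": [
                {
                    "name": version,
                    "served": True,
                    "storage": True,  # exactly one version is the storage version
                    "schema": {
                        "openAPIV3Schema": {
                            "type": "object",
                            # Follows the .spec / .status convention mentioned above.
                            "properties": {"spec": open_object, "status": open_object},
                        }
                    },
                }
            ],
        },
    }


# Hypothetical example values.
crd = make_crd("example.com", "MyObject", "myobjects")
print(json.dumps(crd, indent=2))
```

Once such a manifest is applied, objects of the new kind can be listed with kubectl like any built-in resource (here, `kubectl get myobjects`).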

Crossplane, a Universal Control Plane API for Cloud Computing. Crossplane Workloads Definitions¶

- crossplane.io 🌟 Crossplane is an open source Kubernetes add-on that supercharges your Kubernetes clusters enabling you to provision and manage infrastructure, services, and applications from kubectl.

- Crossplane, a Universal Control Plane API for Cloud Computing

- Crossplane as an OpenShift Operator to manage and provision cloud-native services

Kubectl commands¶

Kubectl Cheat Sheets¶

List all resources and sub resources that you can constrain with RBAC¶

- A handy way to see everything you can affect with Kubernetes RBAC: the command below lists all resources and subresources that you can constrain with RBAC. To see only the subresources of one resource, append “| grep {name}/”:

kubectl get --raw /openapi/v2 | jq '.paths | keys[]'
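The path keys printed by the command above can also be post-processed in code. The sketch below is a heuristic, not an official API: it assumes that a subresource path ends in `<resource>/{name}/<subresource>`, and the helper name `rbac_subresources` and the sample paths are illustrative.

```python
def rbac_subresources(paths):
    """Return sorted 'resource/subresource' pairs found in OpenAPI path keys.

    Heuristic: a subresource path ends in .../<resource>/{name}/<subresource>.
    """
    found = set()
    for path in paths:
        parts = path.strip("/").split("/")
        if len(parts) >= 3 and parts[-2] == "{name}" and not parts[-1].startswith("{"):
            found.add(f"{parts[-3]}/{parts[-1]}")
    return sorted(found)


# Sample keys in the shape produced by the jq command above.
sample = [
    "/api/v1/namespaces/{namespace}/pods/{name}",
    "/api/v1/namespaces/{namespace}/pods/{name}/log",
    "/apis/apps/v1/namespaces/{namespace}/deployments/{name}/scale",
]
print(rbac_subresources(sample))  # ['deployments/scale', 'pods/log']
```

The resulting `resource/subresource` strings are in the form used in the `resources` field of an RBAC rule.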

Copy a configMap in kubernetes between namespaces¶

- Copy a ConfigMap between namespaces with the deprecated “--export” flag:

kubectl get configmap --namespace=<source> <configmap> --export -o yaml | sed "s/<source>/<dest>/" | kubectl apply --namespace=<dest> -f -

- The --export flag was deprecated in Kubernetes 1.14 and has since been removed. The following command can be used instead:

kubectl get configmap <configmap-name> --namespace=<source-namespace> -o yaml | sed 's/namespace: <source-namespace>/namespace: <dest-namespace>/' | kubectl create -f -

Copy secrets in kubernetes between namespaces¶

kubectl get secret <secret-name> --namespace=<source-namespace> -o yaml | sed 's/namespace: <source-namespace>/namespace: <dest-namespace>/' | kubectl create -f -
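The sed pipelines above rewrite text, so they can also touch unrelated strings that happen to match. A possible alternative, sketched here under the assumption that the object was fetched with `-o json` and parsed into a dictionary, is to change the namespace structurally and drop server-populated metadata. The helper name `retarget` and the sample Secret are hypothetical.

```python
import copy

# Metadata fields set by the API server that should not be carried to the copy.
SERVER_FIELDS = ("resourceVersion", "uid", "creationTimestamp", "selfLink", "managedFields")


def retarget(manifest: dict, namespace: str) -> dict:
    """Return a copy of a manifest that targets another namespace."""
    out = copy.deepcopy(manifest)
    meta = out.setdefault("metadata", {})
    for field in SERVER_FIELDS:
        meta.pop(field, None)
    meta["namespace"] = namespace
    return out


# Hypothetical object, shaped like the output of `kubectl get secret ... -o json`.
secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-creds", "namespace": "dev", "uid": "1234", "resourceVersion": "42"},
    "data": {"password": "cGFzc3dvcmQ="},
}
print(retarget(secret, "staging")["metadata"])
```

The returned dictionary can be dumped as JSON and piped to `kubectl create -f -`, as in the commands above.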

Export resources with kubectl and python¶

- Export resources with zoidbergwill/export.sh, by zoidbergwill

Kubectl Alternatives¶

Manage Kubernetes (K8s) objects with Ansible Kubernetes Module¶

Jenkins Kubernetes Plugins¶

Client Libraries for Kubernetes¶

Go Client for Kubernetes¶

- Go client for Kubernetes (client-go): Go clients for talking to a Kubernetes cluster.

Fabric8 Java Client for Kubernetes¶

- Fabric8 has been available as a Java client for Kubernetes since 2015, and today is one of the most popular client libraries for Kubernetes (the most popular is client-go, which is the client library for the Go programming language on Kubernetes). In recent years, fabric8 has evolved from a Java client for the Kubernetes REST API to a full-fledged alternative to the kubectl command-line tool for Java-based development.

- developers.redhat.com: Getting started with the fabric8 Kubernetes Java client

- developers.redhat.com: How the fabric8 Maven plug-in deploys Java applications to OpenShift

- Fabric8.io Microservices Development Platform: an open source microservices platform based on Docker, Kubernetes and Jenkins, built by Red Hat. The purpose of the project is to make it easy to create microservices, build, test and deploy them via Continuous Delivery pipelines, then run and manage them with Continuous Improvement and ChatOps. Fabric8 installs and configures the following for you automatically: Jenkins, Gogs, Fabric8 registry, Nexus, SonarQube.

Helm Kubernetes Tool¶

- helm.sh

- GitHub: Helm, the Kubernetes Package Manager Installing and managing Kubernetes applications

- Helm and Kubernetes Tutorial - Introduction

- Delve into Helm: Advanced DevOps

- Continuously delivering apps to Kubernetes using Helm

- Zero to Kubernetes CI/CD in 5 minutes with Jenkins and Helm

- DevOps with Azure, Kubernetes, and Helm

- dzone: the art of the helm chart patterns

- dzone: 15 useful helm chart tools

- dzone: create install upgrade and rollback a helm chart - part 1

- dzone: create install upgrade and rollback a helm chart - part 2

- dzone: cicd with kubernetes and helm

- dzone: do you need helm?

- dzone: managing helm releases the gitops way

- codefresh.io: Using Helm 3 with Helm 2 charts

- Helm Charts:

- Jenkins

- Codecentric Jenkins 🌟 Helm 3 compliant (Simpler and more secure than helm 2)

- Nexus3

- Choerodon Nexus3 🌟 Helm 3 compliant (Simpler and more secure than helm 2)

- Sonar

- Selenium

- Jmeter

- bitnami: create your first helm chart

- Helm Charts repositories:

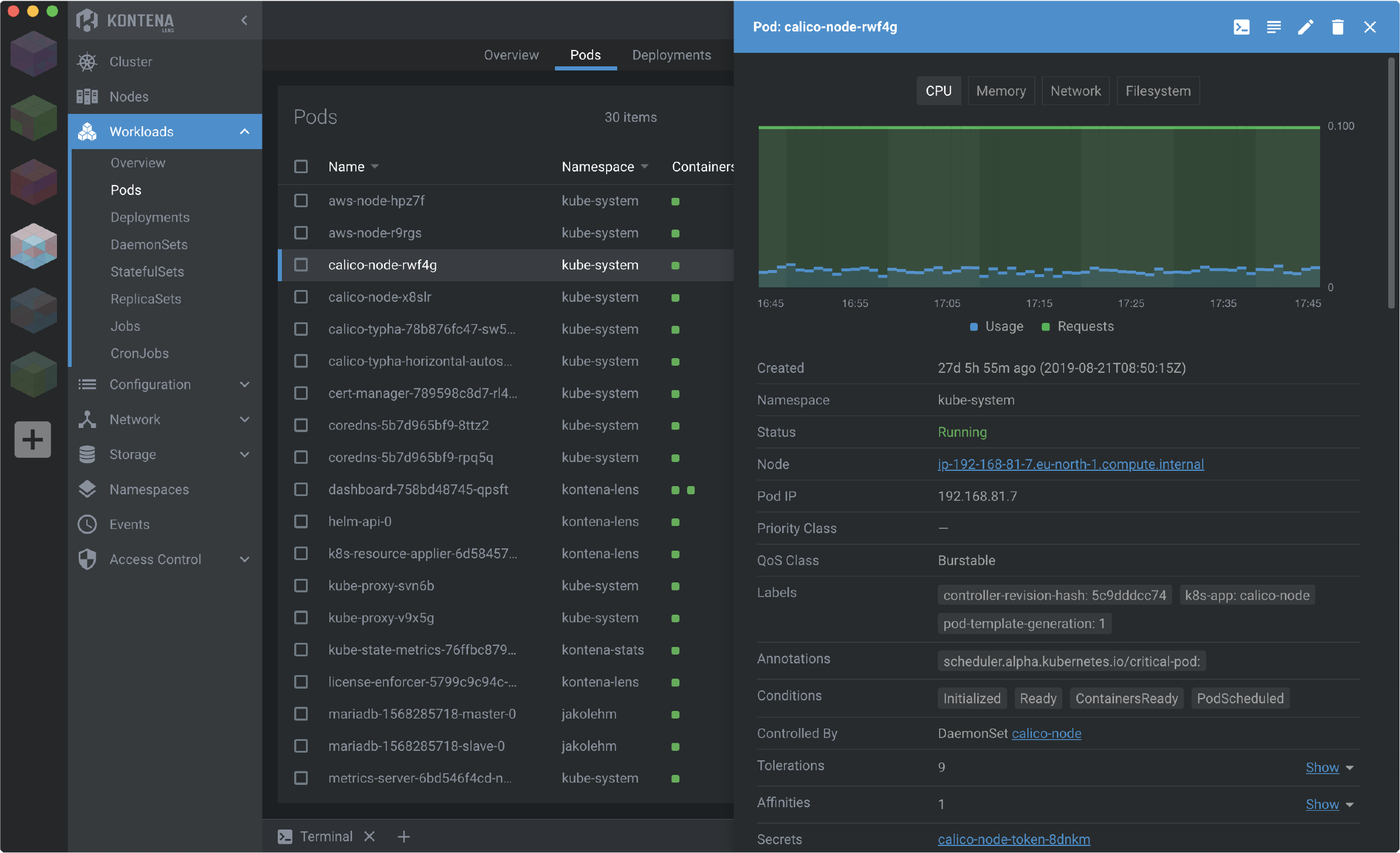

Lens Kubernetes IDE¶

- Lens Kubernetes IDE 🌟 Lens is the only IDE you’ll ever need to take control of your Kubernetes clusters. It’s open source and free. Download it today!

Cluster Autoscaler Kubernetes Tool¶

- kubernetes.io: Cluster Management - Resizing a cluster

- github.com/kubernetes: Kubernetes Cluster Autoscaler

- Kubernetes Autoscaling in Production: Best Practices for Cluster Autoscaler, HPA and VPA In this article we will take a deep dive into Kubernetes autoscaling tools including the cluster autoscaler, the horizontal pod autoscaler and the vertical pod autoscaler. We will also identify best practices that developers, DevOps and Kubernetes administrators should follow when configuring these tools.

- gitconnected.com: Kubernetes Autoscaling 101: Cluster Autoscaler, Horizontal Pod Autoscaler, and Vertical Pod Autoscaler

- packet.com: Kubernetes Cluster Autoscaler

- itnext.io: Kubernetes Cluster Autoscaler: More than scaling out

- cloud.ibm.com: Containers Troubleshoot Cluster Autoscaler

- platform9.com: Kubernetes Autoscaling Options: Horizontal Pod Autoscaler, Vertical Pod Autoscaler and Cluster Autoscaler

- banzaicloud.com: Autoscaling Kubernetes clusters

- tech.deliveryhero.com: Dynamically overscaling a Kubernetes cluster with cluster-autoscaler and Pod Priority

HPA and VPA¶

Cluster Autoscaler and Helm¶

- hub.helm.sh: cluster-autoscaler The cluster autoscaler scales worker nodes within an AWS autoscaling group (ASG) or Spotinst Elastigroup.

Cluster Autoscaler and DockerHub¶

Cluster Autoscaler in GKE, EKS, AKS and DOKS¶

- Amazon Web Services: EKS Cluster Autoscaler

- Azure: AKS Cluster Autoscaler

- Google Cloud Platform: GKE Cluster Autoscaler

- DigitalOcean Kubernetes: DOKS Cluster Autoscaler

Cluster Autoscaler in OpenShift¶

- OpenShift 3.11: Configuring the cluster auto-scaler in AWS

- OpenShift 4.4: Applying autoscaling to an OpenShift Container Platform cluster

Kubernetes Special Interest Groups (SIGs). Kubernetes Community¶

- Kubernetes Special Interest Groups (SIGs) have been around to support the community of developers and operators since around the 1.0 release. People organized around networking, storage, scaling and other operational areas.

- SIG Apps: build apps for and operate them in Kubernetes

- Kubernetes SIGs 🌟

Kubectl Plugins¶

- Available kubectl plugins 🌟

- Extend kubectl with plugins

- youtube: Welcome to the world of kubectl plugins

Kubectl Plugins and Tools¶

- ramitsurana/awesome-kubernetes: Tools 🌟

- VMware octant A web-based, highly extensible platform for developers to better understand the complexity of Kubernetes clusters.

- octant.dev Visualize your Kubernetes workloads. Octant is an open source developer-centric web interface for Kubernetes that lets you inspect a Kubernetes cluster and its applications.

- KSS - Kubernetes pod status on steroid

- kubectl-debug

- kubectl-tree kubectl plugin to browse Kubernetes object hierarchies as a tree

- The Golden Kubernetes Tooling and Helpers list

- kubech (kubectl change) Set kubectl contexts/namespaces per shell/terminal to manage multiple Kubernetes clusters at the same time.

- Kubecle is a web UI running locally that provides useful information about your Kubernetes clusters. It is an alternative to Kubernetes Dashboard. Because it runs locally, it works with any Kubernetes cluster you have access to.

- Permission Manager is a project that brings sanity to Kubernetes RBAC and Users management, Web UI FTW

- developer.sh: Kubernetes client tools overview

- kubectx Faster way to switch between clusters and namespaces in kubectl

- go-kubectx 5x-10x faster alternative to kubectx. Uses client-go.

- kubevious is open-source software that provides a usable and highly graphical interface for Kubernetes. Kubevious renders all configurations relevant to the application in one place.

- Guard is a Kubernetes Webhook Authentication server. Using Guard, you can log into your Kubernetes cluster using various auth providers. Guard also configures groups of authenticated users appropriately.

- itnext.io: arkade by example — Kubernetes apps, the easy way 🌟

- Kubei is a flexible Kubernetes runtime scanner that scans images of worker and Kubernetes nodes, providing an accurate vulnerability assessment.

- Tubectl: a kubectl alternative which adds a bit of magic to your everyday kubectl routines by reducing the complexity of working with contexts, namespaces and intelligent matching resources.

- Kpt: Packaging up your Kubernetes configuration with git and YAML since 2014 (Google)

- Krew 🌟 is the plugin manager for kubectl command-line tool.

- kubernetes-common-services These services help make it easier to manage your applications environment in Kubernetes

- k8s-job-notify Kubernetes Job/CronJob Notifier. This tool sends an alert to slack whenever there is a Kubernetes cronJob/Job failure/success.

- kube-opex-analytics 🌟 Kubernetes Cost Allocation and Capacity Planning Analytics Tool. Built-in hourly, daily, monthly reports - Prometheus exporter - Grafana dashboard.

- kubeletctl is a command-line tool that implements kubelet’s API. Part of kubelet’s API is documented, but most of it is not. This tool covers all the documented and undocumented APIs. The full list of kubelet’s API calls can be viewed through the tool or this API table. What can it do?

- Run any kubelet API call

- Scan for nodes with opened kubelet API

- Scan for containers with RCE

- Run a command on all the available containers by kubelet at the same time

- Get service account tokens from all available containers by kubelet

- Nice printing :)

- inspektor-gadget Collection of gadgets for debugging and introspecting Kubernetes applications using BPF kinvolk.io

- K8bit — the tiny Kubernetes dashboard 🌟 K8bit is a tiny dashboard that is meant to demonstrate how to use the Kubernetes API to watch for changes.

- KUbernetes Test TooL (kuttl) 🌟

- Portfall: A desktop k8s port-forwarding portal for easy access to all your cluster UIs 🌟

- k8s-dt-node-labeller is a Kubernetes controller for labelling a node with devicetree properties (devicetree is a data structure for describing hardware).

- kube-backup: Kubernetes resource state sync to git

- kubedev 🌟 is a Kubernetes Dashboard that helps developers in their everyday usage

- Kubectl SSH Proxy 🌟 Kubectl plugin to launch an SSH SOCKS proxy and use it. This plugin aims to make your life easier when using kubectl with a cluster that’s behind an SSH bastion.

Kubernetes Troubleshooting¶

- Kubernetes troubleshooting diagram 🌟

- Understanding Kubernetes cluster events 🌟

- nigelpoulton.com: Troubleshooting kubernetes service discovery - Part 1

- medium: 5 tips for troubleshooting apps on Kubernetes

- managedkube.com: Troubleshooting a Kubernetes ingress

Kubernetes Tutorials¶

- katacoda.com 🌟 Interactive Learning and Training Platform for Software Engineers

- kubernetesbyexample.com 🌟

- Play with Kubernetes 🌟 A simple, interactive and fun playground to learn Kubernetes

- devopscube.com: Kubernetes Tutorials For Beginners: Getting Started Guide 🌟

- Introduction to Kubernetes (slides, beginners and advanced) 🌟

- medium.com: Kubernetes 101: Pods, Nodes, Containers, and Clusters

- medium.com: Learn Kubernetes in Under 3 Hours: A Detailed Guide to Orchestrating Containers

- kubernetestutorials.com: Install and Deploy Kubernetes on CentOs 7

- medium.com: Simplifying orchestration with Kubernetes

- aquasec.com: 70 Best Kubernetes Tutorials 🌟 Valuable Kubernetes tutorials from multiple sources, classified into the following categories: Kubernetes AWS and Azure tutorials, networking tutorials, clustering and federation tutorials and more.

- cloud.google.com: kubernetes comic 🌟 Learn about kubernetes and how you can use it for continuous integration and delivery.

- magalix.com: Kubernetes 101 - Concepts and Why It Matters

- Google Play: Learning Solution - Learn Kubernetes 🌟

- Google Play: TomApp - Learn Kubernetes

- Dzone refcard: Getting Started with Kubernetes

- udemy.com: Learn DevOps: The Complete Kubernetes Course 🌟

- udemy.com: Learn DevOps: Advanced Kubernetes Usage 🌟

- wardviaene/kubernetes-course 🌟

- wardviaene/advanced-kubernetes-course 🌟

- dzone: The complete kubernetes collection tutorials and tools 🌟

- dzone: kubernetes in 10 minutes a complete guide to look

- magalix.com: The Best Kubernetes Tutorials 🌟

- 35 Advanced Tutorials to Learn Kubernetes 🌟

Famous Kubernetes resources of 2019¶

- Kubernetes for developers

- Kubernetes for the Absolute Beginners

- Kubernetes: Getting Started (Free)

- Kubernetes Tutorial: Learn the Basics

- Complete Kubernetes Course

- Getting started with Kubernetes

Famous Kubernetes resources of 2020¶

- javarevisited.blogspot.com: Top 5 courses to Learn Docker and Kubernetes in 2020 - Best of Lot

- skillslane.com: 10 Best Kubernetes Courses [2020]: Beginner to Advanced Courses

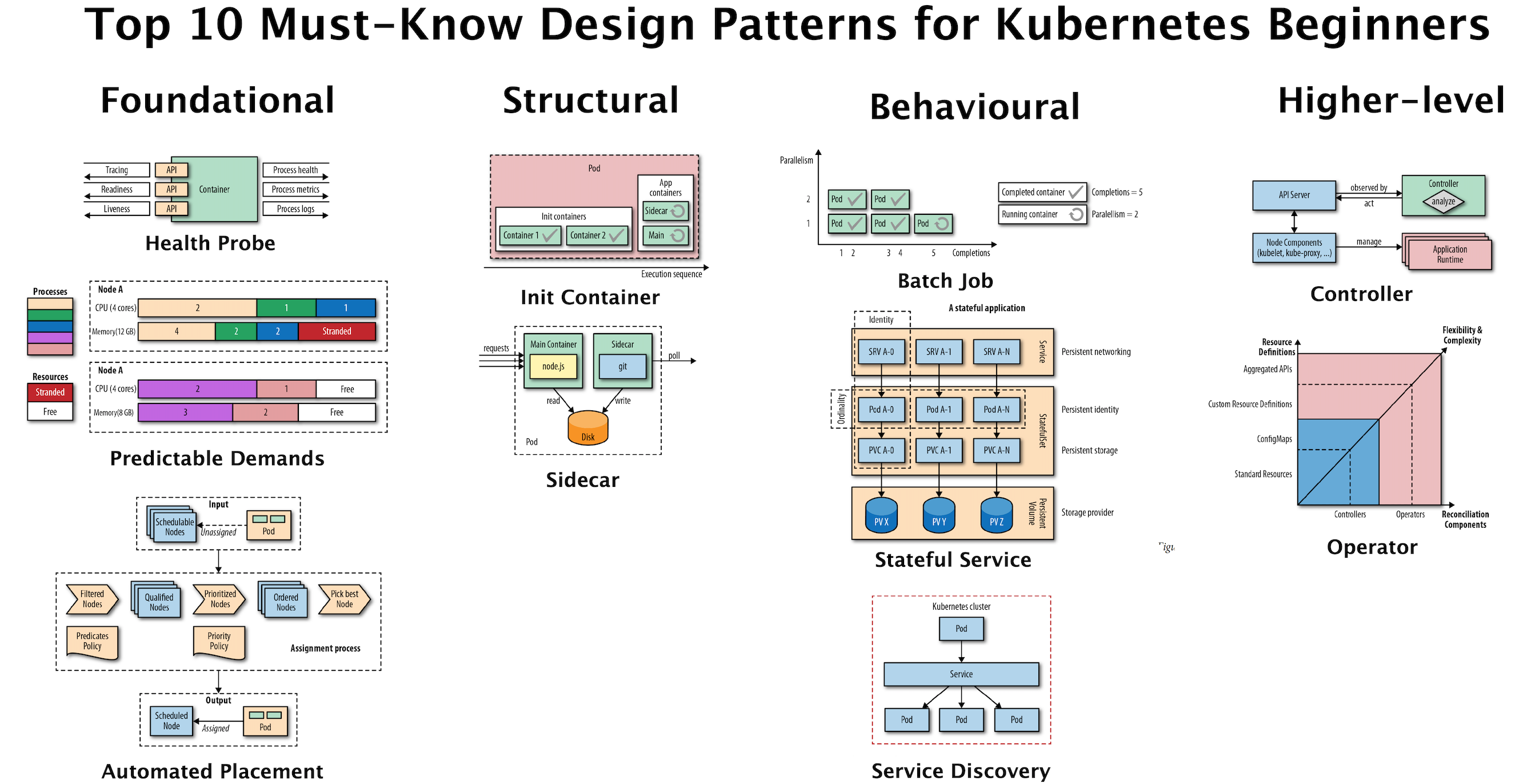

Kubernetes Patterns¶

- github.com/k8spatterns/examples 🌟 Examples for “Kubernetes Patterns - Reusable Elements for Designing Cloud-Native Applications”

- kubernetes.io: container design patterns

- magalix.com: Kubernetes Patterns - The Service Discovery Pattern 🌟

- gardener.cloud: Kubernetes Antipatterns

- dzone.com: Performance Patterns in Microservices-Based Integrations 🌟

- developers.redhat.com: Top 10 must-know Kubernetes design patterns

e-Books¶

Famous Kubernetes resources of 2019¶

Kubernetes Patterns eBooks¶

- k8spatterns.io: Free Kubernetes Patterns e-book 🌟

- magalix.com: Free Kubernetes Application Architecture Patterns eBook 🌟

Kubernetes Operators¶

- kruschecompany.com: What is a Kubernetes Operator and Where it Can be Used?

- kruschecompany.com: Prometheus Operator – Installing Prometheus Monitoring Within The Kubernetes Environment

- redhat.com: Kubernetes operators - Embedding operational expertise side by side with containerized applications

- hashicorp.com: Creating Workspaces with the HashiCorp Terraform Operator for Kubernetes

- banzaicloud.com: Kafka rolling upgrade made easy with Supertubes

- devops.com: Day 2 for the Operator Ecosystem 🌟

- KUDO: The Kubernetes Universal Declarative Operator 🌟 KUDO is a toolkit that makes it easy to build Kubernetes Operators, in most cases just using YAML.

- itnext.io: Operator Lifecycle Manager (OLM) 🌟

Flux. The GitOps Operator for Kubernetes¶

- Flux 🌟 The GitOps operator for Kubernetes

- docs.fluxcd.io

- github: Flux CD

- dzone: Developing Applications on Multi-tenant Clusters With Flux and Kustomize Take a look at how multiple teams can use the resources of a single cluster to develop an application.

Writing Kubernetes Operators¶

- Kubernetes.io: Operator pattern

- opensource.com: Build a Kubernetes Operator in 10 minutes with Operator SDK

- magalix.com: Creating Custom Kubernetes Operators

- medium.com: Writing Your First Kubernetes Operator

- bmc.com: What Is a Kubernetes Operator?

Kubernetes Networking¶

- dzone: how to setup kubernetes networking

- AWS and Kubernetes Networking Options and Trade-Offs (part 1)

- AWS and Kubernetes Networking Options and Trade-Offs (part 2)

- AWS and Kubernetes Networking Options and Trade-Offs (part 3)

- ovh.com - getting external traffic into kubernetes: clusterip, nodeport, loadbalancer and ingress

- youtube: Kubernetes Ingress Explained Completely For Beginners

- stackrox.com: Kubernetes Networking Demystified: A Brief Guide

- medium.com: Fighting Service Latency in Microservices With Kubernetes

- medium.com: Kubernetes NodePort vs LoadBalancer vs Ingress? When should I use what? 🌟

- blog.alexellis.io: Get a LoadBalancer for your private Kubernetes cluster

- dustinspecker.com: How Do Kubernetes and Docker Create IP Addresses?!

Xposer Kubernetes Controller To Manage Ingresses¶

- Xposer 🌟 A Kubernetes controller to manage (create/update/delete) Kubernetes Ingresses based on the Service

- Problem: We would like to watch for services running in our cluster, create Ingresses for them and (optionally) generate TLS certificates automatically.

- Solution: Xposer can watch all the services running in our cluster. It creates, updates and deletes Ingresses and uses cert-manager to generate TLS certificates automatically, based on some annotations.

CNI Container Networking Interface¶

- Kubernetes.io: Network Plugins

- rancher.com: Container Network Interface (CNI) Providers

- github.com/containernetworking 🌟

- dzone: How to Understand and Set Up Kubernetes Networking Take a look at this tutorial that goes through and explains the inner workings of Kubernetes networking, including working with multiple networks.

- medium: Container Networking Interface aka CNI

Project Calico¶

- Project Calico 🌟 Secure networking for the cloud native era

Kubernetes Sidecars¶

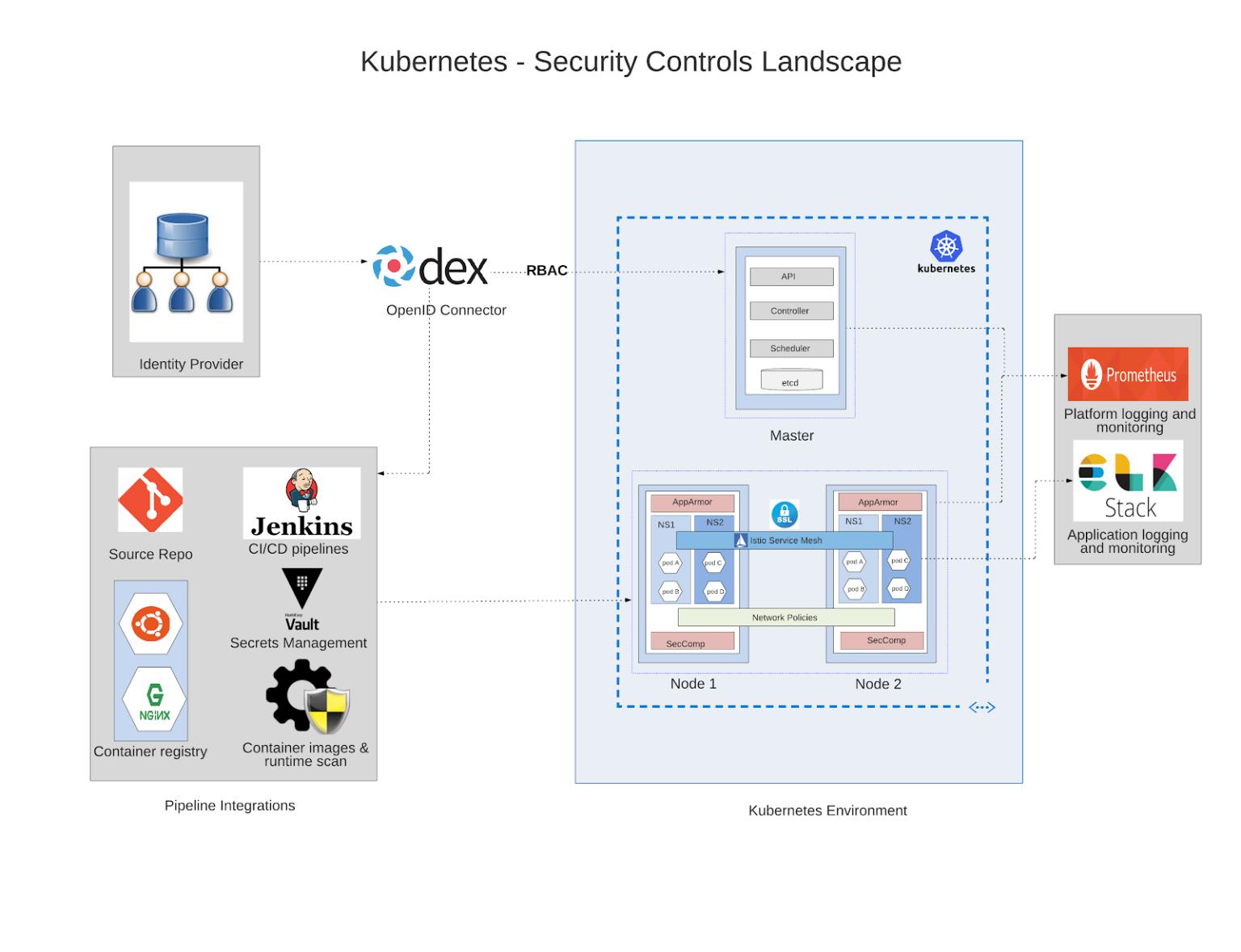

Kubernetes Security¶

- cilium.io

- Dzone - devops security at scale

- Dzone - Kubernetes Policy Management with Kyverno

- Dzone - OAuth 2.0

- Kubernetes Security Best Practices 🌟

- Kubernetes Certs

- jeffgeerling.com: Everyone might be a cluster-admin in your Kubernetes cluster

- rancher.com: Enhancing Kubernetes Security with Pod Security Policies, Part 1

- Microsoft.com: Attack matrix for Kubernetes 🌟

- codeburst.io: 7 Kubernetes Security Best Practices You Must Follow

Security Best Practices Across Build, Deploy, and Runtime Phases¶

- Kubernetes Security 101: Risks and 29 Best Practices 🌟

- Build Phase:

- Use minimal base images

- Don’t add unnecessary components

- Use up-to-date images only

- Use an image scanner to identify known vulnerabilities

- Integrate security into your CI/CD pipeline

- Label non-fixable vulnerabilities

- Deploy Phase:

- Use namespaces to isolate sensitive workloads

- Use Kubernetes network policies to control traffic between pods and clusters

- Prevent overly permissive access to secrets

- Assess the privileges used by containers

- Assess image provenance, including registries

- Extend your image scanning to deploy phase

- Use labels and annotations appropriately

- Enable Kubernetes role-based access control (RBAC)

- Runtime Phase:

- Leverage contextual information in Kubernetes

- Extend vulnerability scanning to running deployments

- Use Kubernetes built-in controls when available to tighten security

- Monitor network traffic to limit unnecessary or insecure communication

- Leverage process whitelisting

- Compare and analyze different runtime activity in pods of the same deployments

- If breached, scale suspicious pods to zero
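One of the Deploy Phase items above, using network policies to control traffic between pods, is often started from a "default deny" policy. The sketch below generates such a manifest in Python; the helper name `default_deny_ingress` and the namespace `payments` are hypothetical, and the policy only takes effect if the cluster's network plugin enforces NetworkPolicy.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Build a NetworkPolicy that blocks all ingress to Pods in a namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            # An empty podSelector selects every Pod in the namespace.
            "podSelector": {},
            # Listing Ingress without any ingress rules denies all inbound traffic.
            "policyTypes": ["Ingress"],
        },
    }


# Hypothetical namespace holding a sensitive workload.
policy = default_deny_ingress("payments")
print(json.dumps(policy, indent=2))
```

Additional, narrower policies can then allow only the traffic each workload actually needs.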

Kubernetes Authentication and Authorization¶

- kubernetes.io: Authenticating

- kubernetes.io: Access Clusters Using the Kubernetes API

- kubernetes.io: Accessing Clusters

- magalix.com: kubernetes authentication 🌟

- magalix.com: kubernetes authorization 🌟

- kubernetes login

Kubernetes Authentication Methods¶

Kubernetes supports several authentication methods out-of-the-box, such as X.509 client certificates, static HTTP bearer tokens, and OpenID Connect.

X.509 client certificates¶

Static HTTP Bearer Tokens¶

- kubernetes.io: Access Clusters Using the Kubernetes API

- stackoverflow: Accessing the Kubernetes REST end points using bearer token
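For orientation, bearer-token authentication means sending the token in an `Authorization` header with each request to the API server. The sketch below only builds such a request with the Python standard library and does not send it; the server address, the token value and the helper name `api_request` are placeholders, not values from this page.

```python
import urllib.request


def api_request(server: str, path: str, token: str) -> urllib.request.Request:
    """Build a GET request for the Kubernetes API using a bearer token."""
    url = server.rstrip("/") + "/" + path.lstrip("/")
    headers = {"Authorization": f"Bearer {token}", "Accept": "application/json"}
    return urllib.request.Request(url, headers=headers)


# Placeholder values for illustration only.
req = api_request("https://127.0.0.1:6443", "/api/v1/namespaces", "example-token")
print(req.full_url)

# To actually send it, pass an SSL context that trusts the cluster CA, e.g.:
#   ctx = ssl.create_default_context(cafile="ca.crt")
#   urllib.request.urlopen(req, context=ctx)
```

Keeping request construction separate from sending makes it easy to check the header without a running cluster.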

OpenID Connect¶

Implementing a custom Kubernetes authentication method¶

Pod Security Policies (SCCs - Security Context Constraints in OpenShift)¶

EKS Security¶

Kubernetes Scheduling and Scheduling Profiles¶

Assigning Pods to Nodes. Pod Affinity and Anti-Affinity¶

Pod Topology Spread Constraints and PodTopologySpread Scheduling Plugin¶

Kubernetes Storage¶

Kubernetes Volumes Guide¶

- Filesystem vs Volume vs Persistent Volume 🌟

- This is a guide that covers:

- How to set up and use volumes in Kubernetes

- What are persistent volumes, and how to use them

- How to use an NFS volume

- Shared data and volumes between pods

ReadWriteMany PersistentVolumeClaims¶

- Create ReadWriteMany PersistentVolumeClaims on your Kubernetes Cluster 🌟 Kubernetes allows us to provision our PersistentVolumes dynamically using PersistentVolumeClaims. Pods treat these claims as volumes. The access mode of the PVC determines how many nodes can establish a connection to it. We can refer to the resource provider’s docs for their supported access modes.

- DigitalOcean: Kubernetes PVC ReadWriteMany access mode alternative
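As a rough illustration of what such a claim looks like, the sketch below builds a PersistentVolumeClaim that requests the ReadWriteMany access mode. The helper name `make_rwx_pvc` and the storage class `nfs-client` are assumptions; whether the claim binds depends on the storage provider supporting that access mode.

```python
import json


def make_rwx_pvc(name: str, size: str, storage_class: str) -> dict:
    """Build a PersistentVolumeClaim requesting the ReadWriteMany access mode."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteMany: the volume can be mounted read-write by many nodes.
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical storage class; check the provider docs for supported access modes.
pvc = make_rwx_pvc("shared-data", "5Gi", "nfs-client")
print(json.dumps(pvc, indent=2))
```

Pods then reference the claim by name in a `persistentVolumeClaim` volume.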

Non-production Kubernetes Local Installers¶

- Minikube A tool that makes it easy to run Kubernetes locally inside a Linux VM. It’s aimed at users who just want to test it out or use it for development. It cannot spin up a production cluster: it is a single-node setup with no high availability.

- kind Kubernetes IN Docker - local clusters for testing Kubernetes

- store.docker.com: Docker Community Edition EDGE with Kubernetes. Installing Kubernetes using the Docker client; currently only available in the Edge edition.

- medium.com: Local Kubernetes for Linux — MiniKube vs MicroK8s

- itnext.io: Run Kubernetes On Your Machine Several options to start playing with K8s in no time

Kubernetes in Public Cloud¶

GKE vs EKS vs AKS¶

- medium.com: Kubernetes Cloud Services: Comparing GKE, EKS and AKS

- stackrox.com: EKS vs GKE vs AKS - Evaluating Kubernetes in the Cloud

- youtube: Kubernetes Comparison A beautiful comparison of Kubernetes Services from GCP, AWS and Azure by learnk8s.

AWS EKS (Hosted/Managed Kubernetes on AWS)¶

- dzone: kops vs EKS

- udemy.com: amazon eks starter kubernetes on aws

- eksctl: EKS installer

- medium: Implementing Kubernetes Cluster using AWS EKS (AWS Managed Kubernetes)

- Amazon EKS Security Best Practices

- thenewstack.io: Install and Configure OpenEBS on Amazon Elastic Kubernetes Service

- cloudonaut.io: Scaling Container Clusters on AWS: ECS and EKS 🌟

- magalix.com: Deploying Kubernetes Cluster With EKS 🌟 Fargate Deployment vs. Linux Workload

Tools for multi-cloud Kubernetes management¶

- Compare tools for multi-cloud Kubernetes management 🌟

On-Premise Production Kubernetes Cluster Installers¶

Comparative Analysis of Kubernetes Deployment Tools¶

- A Comparative Analysis of Kubernetes Deployment Tools: Kubespray, kops, and conjure-up

- wecloudpro.com: Deploy HA kubernetes cluster in AWS in less than 5 minutes

Deploying Kubernetes Cluster with Kops¶

- GitHub: Kubernetes Cluster with Kops

- Kubernetes.io: Installing Kubernetes with kops

- Minikube and docker client are great for local setups, but not for real clusters. Kops and kubeadm are tools to spin up a production cluster. You don’t need both tools, just one of them.

- On AWS, the best tool is kops. However, now that AWS EKS (hosted Kubernetes) is available, EKS is the preferred option because you don’t need to maintain the masters.

- For other installs, or if you can’t get kops to work, you can use kubeadm.

- Set up kops on Windows with virtualbox.org and vagrantup.com. Once both are installed, create a new Linux VM by spinning up Ubuntu via Vagrant in cmd/PowerShell, then run the kops installer:

C:\ubuntu> vagrant init ubuntu/xenial64

C:\ubuntu> vagrant up

C:\ubuntu> vagrant ssh-config

C:\ubuntu> vagrant ssh

$ curl -LO https://github.com/kubernetes/kops/releases/download/$(curl -s https://api.github.com/repos/kubernetes/kops/releases/latest | grep tag_name | cut -d '"' -f 4)/kops-linux-amd64

$ chmod +x kops-linux-amd64

$ sudo mv kops-linux-amd64 /usr/local/bin/kops

Deploying Kubernetes Cluster with Kubeadm¶

- Kubernetes Cluster with Kubeadm It works on any deb / rpm compatible Linux OS, for example Ubuntu, Debian, RedHat or CentOS. This is the main advantage of kubeadm. As of Q1 2018 the tool itself was still in beta, and it was expected to become stable later that year. It’s very easy to use and lets you spin up a Kubernetes cluster in just a couple of minutes.

- medium.com: Demystifying High Availability in Kubernetes Using Kubeadm

- Setting Up a Kubernetes Cluster on Ubuntu 18.04

Deploying Kubernetes Cluster with Ansible¶

kube-aws Kubernetes on AWS¶

- Kubernetes on AWS (kube-aws) A command-line tool to declaratively manage Kubernetes clusters on AWS

Kubespray¶

Conjure up¶

WKSctl¶

- Weave Kubernetes System Control - wksctl Open Source Weaveworks Kubernetes System

- WKSctl - A New OSS Kubernetes Manager using GitOps

- WKSctl: a Tool for Kubernetes Cluster Management Using GitOps

Terraform (kubernetes the hard way)¶

- Kelsey Hightower: kubernetes the hard way

- napo.io: Kubernetes The (real) Hard Way on AWS

- napo.io: Terraform Kubernetes Multi-Cloud (ACK, AKS, DOK, EKS, GKE, OKE)

Caravan¶

ClusterAPI¶

Microk8s¶

k8s-tew¶

- k8s-tew Kubernetes is a fairly complex project. For a newbie it is hard to understand and also to use. While Kelsey Hightower’s Kubernetes The Hard Way, on which this project is based, helps a lot to understand Kubernetes, it is optimized for the use with Google Cloud Platform.

Kubernetes Distributions¶

Red Hat OpenShift¶

- Openshift Container Platform

- OKD The Community Distribution of Kubernetes that powers Red Hat OpenShift

Weave Kubernetes Platform¶

- weave.works: Weave Kubernetes Platform Automate Enterprise Kubernetes the GitOps way

- github: Weave Net - Weaving Containers into Applications

Ubuntu Charmed Kubernetes¶

VMware Kubernetes Tanzu and Project Pacific¶

- blogs.vmware.com: Introducing Project Pacific (vSphere with Kubernetes)

- VMware vSphere 7 with Kubernetes - Project Pacific

- VMware Kubernetes Tanzu

- cormachogan.com: A first look at vSphere with Kubernetes in action

- cormachogan.com: Building a TKG Cluster in vSphere with Kubernetes

- blogs.vmware.com: VMware Tanzu Service Mesh, built on VMware NSX is Now Available!

Rancher: Enterprise management for Kubernetes¶

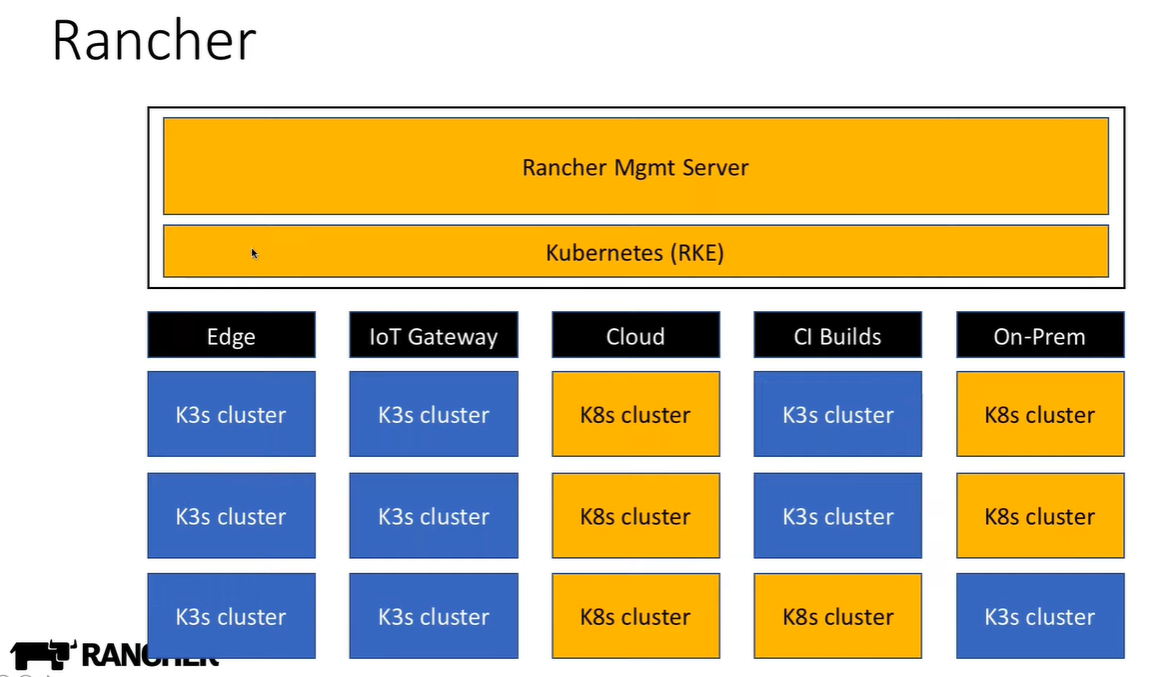

- rancher.com Rancher is enterprise management for Kubernetes, an amazing GUI for managing and installing Kubernetes clusters. They have released a number of pieces of software that are part of this ecosystem, for example Longhorn which is a lightweight and reliable distributed block storage system for Kubernetes.

- rancher.com: Custom alerts using Prometheus queries

- zdnet.com: Rancher Labs closes $40M funding round to “run Kubernetes everywhere” The six-year-old startup is going after new markets that want to run Kubernetes clusters at the edge.

Rancher 2¶

Rancher 2 RKE¶

- Rancher 2 RKE Rancher 2 that runs in docker containers. RKE is a CNCF-certified Kubernetes distribution that runs entirely within Docker containers. It solves the common frustration of installation complexity with Kubernetes by removing most host dependencies and presenting a stable path for deployment, upgrades, and rollbacks.

K3S¶

- k3s Basic kubernetes with automated installer. Lightweight Kubernetes Distribution.

- K8s vs k3s “K3s is designed to be a single binary of less than 40MB that completely implements the Kubernetes API. In order to achieve this, they removed a lot of extra drivers that didn’t need to be part of the core and are easily replaced with add-ons. K3s is a fully CNCF (Cloud Native Computing Foundation) certified Kubernetes offering. This means that you can write your YAML to operate against a regular “full-fat” Kubernetes and they’ll also apply against a k3s cluster. Due to its low resource requirements, it’s possible to run a cluster on anything from 512MB of RAM machines upwards. This means that we can allow pods to run on the master, as well as nodes. And of course, because it’s a tiny binary, it means we can install it in a fraction of the time it takes to launch a regular Kubernetes cluster! We generally achieve sub-two minutes to launch a k3s cluster with a handful of nodes, meaning you can be deploying apps to learn/test at the drop of a hat.”

- k3sup (said ‘ketchup’) is a light-weight utility to get from zero to KUBECONFIG with k3s on any local or remote VM. All you need is ssh access and the k3sup binary to get kubectl access immediately.

- Install Kubernetes with k3sup and k3s

K3S Use Cases¶

- K3S Use Cases:

- Edge computing and Embedded Systems

- IOT Gateway

- CI environments (e.g. Jenkins with Configuration as Code)

- Single-App Clusters

K3S in Public Clouds¶

K3D¶

- k3d k3s that runs in docker containers.

K3OS¶

- k3OS k3OS is a Linux distribution designed to remove as much OS maintenance as possible in a Kubernetes cluster. It is specifically designed to only have what is needed to run k3s. Additionally the OS is designed to be managed by kubectl once a cluster is bootstrapped. Nodes only need to join a cluster and then all aspects of the OS can be managed from Kubernetes. Both k3OS and k3s upgrades are handled by the k3OS operator.

- K3OS Value Add:

- Supports multiple architectures

- K3OS runs on x86 and ARM processors to give you maximum flexibility.

- Runs only the minimum required services

- Fewer services means a tiny attack surface, for greater security.

- Doesn’t require a package manager

- The required services are built into the distribution image.

- Models infrastructure as code

- Manage system configuration with version control systems.


K3C¶

- K3C Lightweight local container engine for container development. K3C is a local container engine designed to fill the same gap Docker does in the Kubernetes ecosystem. Specifically k3c focuses on developing and running local containers, basically docker run/build. Currently k3s, the lightweight Kubernetes distribution, provides a great solution for Kubernetes from dev to production. While k3s satisfies the Kubernetes runtime needs, one still needs to run docker (or a docker-like tool) to actually develop and build the container images. k3c is intended to replace docker for just the functionality needed for the Kubernetes ecosystem.

Hosted Rancher¶

Rancher on Microsoft Azure¶

Rancher RKE on vSphere¶

Rancher Kubernetes on Oracle Cloud¶

- medium.com: OKE Clusters from Rancher 2.0 Part one of a series of articles on creating, monitoring, and managing Kubernetes clusters on OCI using Rancher.

- medium.com: Rancher deployed Kubernetes on Oracle Cloud Infrastructure Part two of a multi-part series on creating, monitoring, and managing Kubernetes clusters (hosted and non-hosted) on OCI.

Rancher Software Defined Storage with Longhorn¶

Rancher Fleet to manage multiple kubernetes clusters¶

- Fleet Management for kubernetes a new open source project from the team at Rancher focused on managing fleets of Kubernetes clusters.

- itnext.io: Fleet Management of Kubernetes Clusters at Scale — Rancher’s Fleet

Kontena Pharos¶

- Pharos 🌟 Kubernetes Distribution

- Stateful Kubernetes-In-a-Box with Kontena Pharos

Mirantis Docker Enterprise with Kubernetes and Docker Swarm¶

- Mirantis Docker Enterprise 3.1+ with Kubernetes

- Docker Enterprise 3.1 announced. Features:

- Istio is now built into Docker Enterprise 3.1!

- Comes with Kubernetes 1.17. Kubernetes on Windows capability.

- Enable Istio Ingress for a Kubernetes cluster with the click of a button

- Intelligent defaults to get started quickly

- Virtual services supported out of the box

- Inbuilt support for GPU Orchestration

- Launchpad CLI for Docker Enterprise deployment & upgrades

Cloud Development Kit (CDK) for Kubernetes¶

- cdk8s.io 🌟 Define Kubernetes apps and components using familiar languages. cdk8s is an open-source software development framework for defining Kubernetes applications and reusable abstractions using familiar programming languages and rich object-oriented APIs. cdk8s apps synthesize into standard Kubernetes manifests which can be applied to any Kubernetes cluster.

- github.com/awslabs/cdk8s

AWS Cloud Development Kit (AWS CDK)¶

- AWS: Introducing CDK for Kubernetes 🌟

- Traditionally, Kubernetes applications are defined with human-readable, static YAML data files which developers write and maintain. Building new applications requires writing a good amount of boilerplate config, copying code from other projects, and applying manual tweaks and customizations. As applications evolve and teams grow, these YAML files become harder to manage. Sharing best practices or making updates involves manual changes and complex migrations.

- YAML is an excellent format for describing the desired state of your cluster, but it does not have primitives for expressing logic and reusable abstractions. There are multiple tools in the Kubernetes ecosystem which attempt to address these gaps in various ways:

- kustomize Customization of kubernetes YAML configurations

- jsonnet data templating language

- jkcfg Configuration as Code with ECMAScript

- kubecfg A tool for managing complex enterprise Kubernetes environments as code.

- kubegen Simple way to describe Kubernetes resources in a structured way, but without new syntax or magic

- Pulumi

- We realized this was exactly the same problem our customers had faced when defining their applications through CloudFormation templates, a problem solved by the AWS Cloud Development Kit (AWS CDK), and that we could apply the same design concepts from the AWS CDK to help all Kubernetes users.
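The gap described above, logic and reusable abstractions on top of static manifests, can be illustrated without any particular tool. The sketch below is not the cdk8s API; it uses only the Python standard library, and the helper names (`web_service`, `synth`) and image names are hypothetical. It defines one reusable "web service" abstraction, applies it in a loop, and emits a single `List` object as JSON, a format `kubectl apply -f` accepts alongside YAML.

```python
from __future__ import annotations

import json


def web_service(name: str, image: str, replicas: int = 2, port: int = 8080) -> list[dict]:
    """Reusable abstraction: one Deployment plus a matching Service, as plain dicts."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": labels},
        "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": port}]},
    }
    return [deployment, service]


def synth(apps: dict[str, str]) -> str:
    """Expand every app into its manifests and wrap them in a single List object."""
    items: list[dict] = []
    for name, image in apps.items():
        items.extend(web_service(name, image))
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2)


if __name__ == "__main__":
    # Hypothetical image names, for illustration only.
    print(synth({"frontend": "example/frontend:1.0", "api": "example/api:1.0"}))
```

Adding a third service becomes one more dictionary entry rather than another copied pair of YAML files, which is the kind of reuse the tools listed above aim for in their own ways.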

SpringBoot with Docker¶

- spring.io: spring boot with docker

- spring.io: Creating Docker images with Spring Boot 2.3.0.M1

- learnk8s.io: Developing and deploying Spring Boot microservices on Kubernetes

Docker in Docker¶

- Building Docker images when running Jenkins in Kubernetes

- itnext.io: docker in docker

- code-maze.com: ci jenkins docker

- medium: quickstart ci with jenkins and docker in docker

- getintodevops.com: the simplest way to run docker in docker

- Docker in Docker on EKS:

Serverless with OpenFaas and Knative¶

Kubernetes interview questions¶

- Kubernetes Interview Questions and Answers 2019 2020

- intellipaat.com: Top Kubernetes Interview Questions and Answers

Container Ecosystem¶

Container Flowchart¶

- Assess managed Kubernetes services for your workloads. Managed services from cloud providers can simplify Kubernetes deployment but create some snags in a multi-cloud model. Follow three steps to see if these services can benefit you.